In this article, Ankit Asthana and Tom Graupner examine why token-based authorization falls short in AI-driven DevOps environments and how over-privileged, context-blind credentials introduce real operational risk. They present a modern, relationship-based authorization approach using OpenFGA and an Agent Gateway, enabling fast automation while ensuring every action is explicitly governed, explainable, and auditable by design.

AI agents are already running parts of DevOps platforms.

The real question is: who's actually in control?

Today, AI agents routinely:

- Open pull requests

- Trigger CI/CD pipelines

- Scale Kubernetes workloads

- Respond to incidents

- Increasingly act without a human in the loop

Yet most organizations still authorize these agents using static credentials:

- API keys

- GitHub Personal Access Tokens

- Kubernetes service account tokens

- CI/CD secrets with broad scopes

This creates a dangerous mismatch.

API keys authenticate identity—but DevOps systems need authorization.

Every decision must answer:

Should this agent perform this action, for this user, on this resource, in this environment, at this moment?

That gap is where many automation-driven DevOps incidents originate.

What breaks with token-based authorization

1. Over-privileged automation

A bot needs to create pull requests, so it's given admin access. Now it can also:

- bypass branch protection

- delete repositories

- rotate secrets

- modify production pipelines

One faulty workflow or hallucinated agent action can cause real damage.

2. Context-blind decisions

A Kubernetes automation agent may hold cluster-admin because it needs to scale workloads. But a token can't distinguish:

- staging vs production

- test alert vs real incident

- approved deployment vs unsafe automation

The result: correct credentials, wrong decision.

3. No accountability

An AI agent opens PRs and deploys "on behalf of developers" using a shared identity. Later, no one can answer:

- Who requested this?

- Who approved this?

- Why was it allowed?

From an audit and compliance standpoint, this is a dead end.

What modern DevOps actually needs

Real-world DevOps requirements are consistent:

- ✅ PR creation should be fast and automated

- ✅ Merge to main should be controlled

- ✅ Staging deploys can move quickly

- ✅ Production deploys must be governed

- ✅ Agent actions must be traceable and explainable

- ❌ No agent should inherit "admin" just because it has a token

This isn't an authentication problem.

This is an authorization problem.

The shift: from tokens to relationships

Traditional authorization asks:

"Does this identity have permission X?"

Modern DevOps needs to ask:

"What is the relationship between this user, this agent, this resource, and this environment, right now?"

This is exactly what Relationship-Based Access Control (ReBAC) enables.

OpenFGA is purpose-built to model and evaluate these relationships at runtime.

The DevOps pattern: OpenFGA + Agent Gateway

Modern DevOps platforms rely heavily on AI agents and automation to perform powerful actions—creating pull requests, triggering deployments, and modifying infrastructure.

The challenge isn't how to execute these actions, but how to control them safely.

This pattern introduces an explicit authorization enforcement layer using OpenFGA and an Agent Gateway, rather than granting AI agents a single, highly privileged token.

High-level flow

Here's how the flow works:

- A user (via ChatOps, API, or UI) interacts with an AI Agent.

- The AI Agent sends a request to the Agent Gateway with:

  - a JWT identifying the human user

  - context about the action (repo, environment, operation)

- The Agent Gateway acts as a centralized enforcement point:

  - validates identity and request context

  - forwards an authorization query to OpenFGA

- OpenFGA evaluates the request against its relationship-based policy model.

- OpenFGA returns an allow/deny decision.

- If allowed, the Agent Gateway calls:

  - GitHub APIs

  - CI/CD systems

  - Kubernetes APIs

  - or other downstream systems

This pattern also aligns naturally with Model Context Protocol (MCP), where the Agent Gateway can act as an MCP server, enforcing OpenFGA-backed authorization over which tools, actions, and execution contexts an AI agent is allowed to access at runtime.
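
The ordering in this flow (validate, ask for a decision, then execute) can be sketched in a few lines of Python. This is a minimal illustration, not the demo's gateway code: `AgentRequest`, `handle`, `authorize`, and `execute` are hypothetical names, and JWT verification is assumed to have happened before the request object is built.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AgentRequest:
    user: str        # human identity from the already-verified JWT
    agent: str       # automation identity making the call
    operation: str   # e.g. "create-pr"
    resource: str    # e.g. "repo:frontend"


def handle(request: AgentRequest,
           authorize: Callable[[AgentRequest], bool],
           execute: Callable[[AgentRequest], str]) -> str:
    """Validate, ask the policy engine, and only then call downstream."""
    # Step 1: reject malformed identities before any policy lookup.
    if not request.user.startswith("user:") or not request.agent.startswith("agent:"):
        return "rejected"
    # Step 2: the decision comes from outside the gateway code (OpenFGA in the demo).
    if not authorize(request):
        return "denied"
    # Step 3: only an allowed request reaches GitHub, CI/CD, or Kubernetes.
    return execute(request)
```

Passing `authorize` and `execute` in as callables keeps the enforcement order testable without a running policy engine or downstream system.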

What Each Component Does

AI Agent

- Orchestrates actions and workflows

- Interprets user intent and prepares requests

- Does not decide whether an action is allowed

Agent Gateway

- Central security and control point

- Enforces enterprise policies consistently

- Handles:

  - authentication (JWT, TLS/mTLS)

  - tracing and access logging

  - metrics and observability

  - guardrails and request validation

- Asks OpenFGA for authorization before executing any sensitive action

OpenFGA (ReBAC Policy Engine)

- Stores relationship facts (who can do what, where, and with which agent)

- Evaluates authorization requests based on those relationships

- Returns an explicit, explainable decision:

  - allow if the relationship exists

  - deny if it does not

OpenFGA never executes actions—it only answers:

"Is this request allowed in this context?"

Key Principles

- Tokens still exist: tokens execute API calls, but possession alone is never sufficient.

- Every action is explicitly gated: all sensitive operations pass through the Agent Gateway and require an OpenFGA decision.

- Authorization is relationship-based: decisions derive from declarative relationships, not hardcoded conditionals.

- Decisions are explainable and auditable: every decision traces back to concrete relationship data.

- No implicit trust for automation: agents only act where explicitly approved.

Relationship-based model (what OpenFGA represents)

OpenFGA does not store permissions like "admin", "write", or "deploy" as static flags.

Instead, it stores facts about relationships between entities.

Authorization decisions are derived at request time by evaluating these relationships against an authorization model. This makes access control dynamic, explainable, and evolvable—without embedding policy logic into application code.

Entities in This Demo

This demo models the core actors and resources in a modern DevOps control plane:

- Users — human identities (developers, maintainers, approvers)

- Agents — automation identities (GitOps bots, deploy bots)

- Repositories — Git repositories

- Environments — Kubernetes environments (staging, production)

Each entity is modeled explicitly. Trust is expressed only through relationships.

Example Relationship Facts

The following raw relationship facts are stored in OpenFGA as tuples:

- user:bob is a contributor of repo:frontend

- user:alice is a maintainer of repo:frontend

- agent:gitops-bot is an approved_agent for repo:frontend

- user:alice is a deployer in environment:staging

- user:bob is a deployer in environment:staging

- user:alice is an approver in environment:production

- agent:deploy-bot is an approved_agent for environment:staging

- agent:deploy-bot is an approved_agent for environment:production

These relationships are simple, declarative facts.

They contain no business logic, no conditionals, and no environment-specific rules.
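
To make "facts, not logic" concrete, here is a small Python sketch that holds the same eight facts as plain data. It is only an in-memory stand-in for illustration; `RelationTuple`, `TUPLES`, and `has_relation` are hypothetical names, not part of OpenFGA.

```python
from typing import NamedTuple


class RelationTuple(NamedTuple):
    user: str      # who (a human or an agent)
    relation: str  # how they relate
    object: str    # to what


# The relationship facts from this demo, stored as data only.
TUPLES = {
    RelationTuple("user:bob", "contributor", "repo:frontend"),
    RelationTuple("user:alice", "maintainer", "repo:frontend"),
    RelationTuple("agent:gitops-bot", "approved_agent", "repo:frontend"),
    RelationTuple("user:alice", "deployer", "environment:staging"),
    RelationTuple("user:bob", "deployer", "environment:staging"),
    RelationTuple("user:alice", "approver", "environment:production"),
    RelationTuple("agent:deploy-bot", "approved_agent", "environment:staging"),
    RelationTuple("agent:deploy-bot", "approved_agent", "environment:production"),
}


def has_relation(user: str, relation: str, obj: str) -> bool:
    """A fact either exists or it does not; there is nothing to branch on."""
    return RelationTuple(user, relation, obj) in TUPLES
```

Granting or revoking trust means adding or removing one entry; no function in the application changes.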

Deriving Permissions from Relationships

The authorization model derives action-level permissions from these relationships, such as:

- user_can_create_pr

- user_can_merge_main

- user_can_deploy_staging

- user_can_deploy_prod

- agent_can_act

Crucially, OpenFGA does not encode "user + agent" logic inside a single permission.

Instead:

- User permissions answer: "Is this human identity allowed to perform this action?"

- Agent permissions answer: "Is this automation explicitly trusted to act here?"

The gateway or control plane (GitOps controller, deployment service, API gateway) combines these answers to make the final authorization decision.

This separation keeps the model correct, composable, and easy to reason about.
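
A rough sketch of how a gateway could combine the two answers follows. The `check` callable stands in for a call to OpenFGA, and `combined_decision` is a hypothetical helper; the result keys (`allowed`, `user_check`, `agent_check`) mirror the response shape shown in the scenarios later in this article.

```python
from typing import Callable

# Any function answering "is (user, relation, object) allowed?".
Check = Callable[[str, str, str], bool]


def combined_decision(check: Check, user: str, user_relation: str,
                      agent: str, obj: str) -> dict:
    """Ask the two questions separately, then AND them in the gateway."""
    user_allowed = check(user, user_relation, obj)
    agent_allowed = check(agent, "agent_can_act", obj)
    return {
        "allowed": user_allowed and agent_allowed,
        "user_check": {"user": user, "relation": user_relation,
                       "object": obj, "allowed": user_allowed},
        "agent_check": {"user": agent, "relation": "agent_can_act",
                        "object": obj, "allowed": agent_allowed},
    }


if __name__ == "__main__":
    # Hypothetical pre-computed answers standing in for OpenFGA.
    answers = {
        ("user:bob", "user_can_create_pr", "repo:frontend"),
        ("agent:gitops-bot", "agent_can_act", "repo:frontend"),
    }
    decision = combined_decision(lambda u, r, o: (u, r, o) in answers,
                                 "user:bob", "user_can_create_pr",
                                 "agent:gitops-bot", "repo:frontend")
    print(decision)
```

Keeping both sub-results in the response makes it visible which half of the decision failed when a request is denied.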

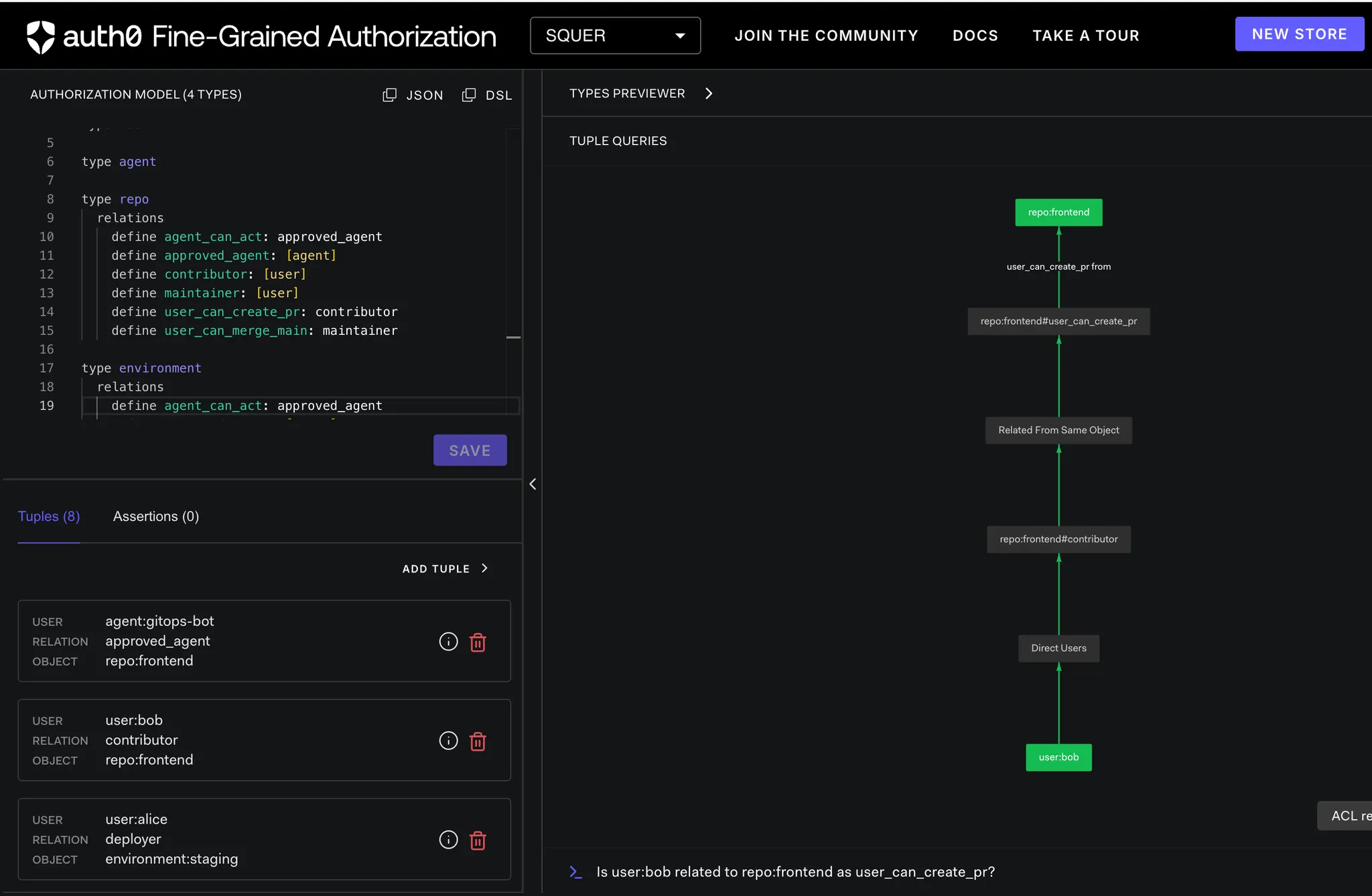

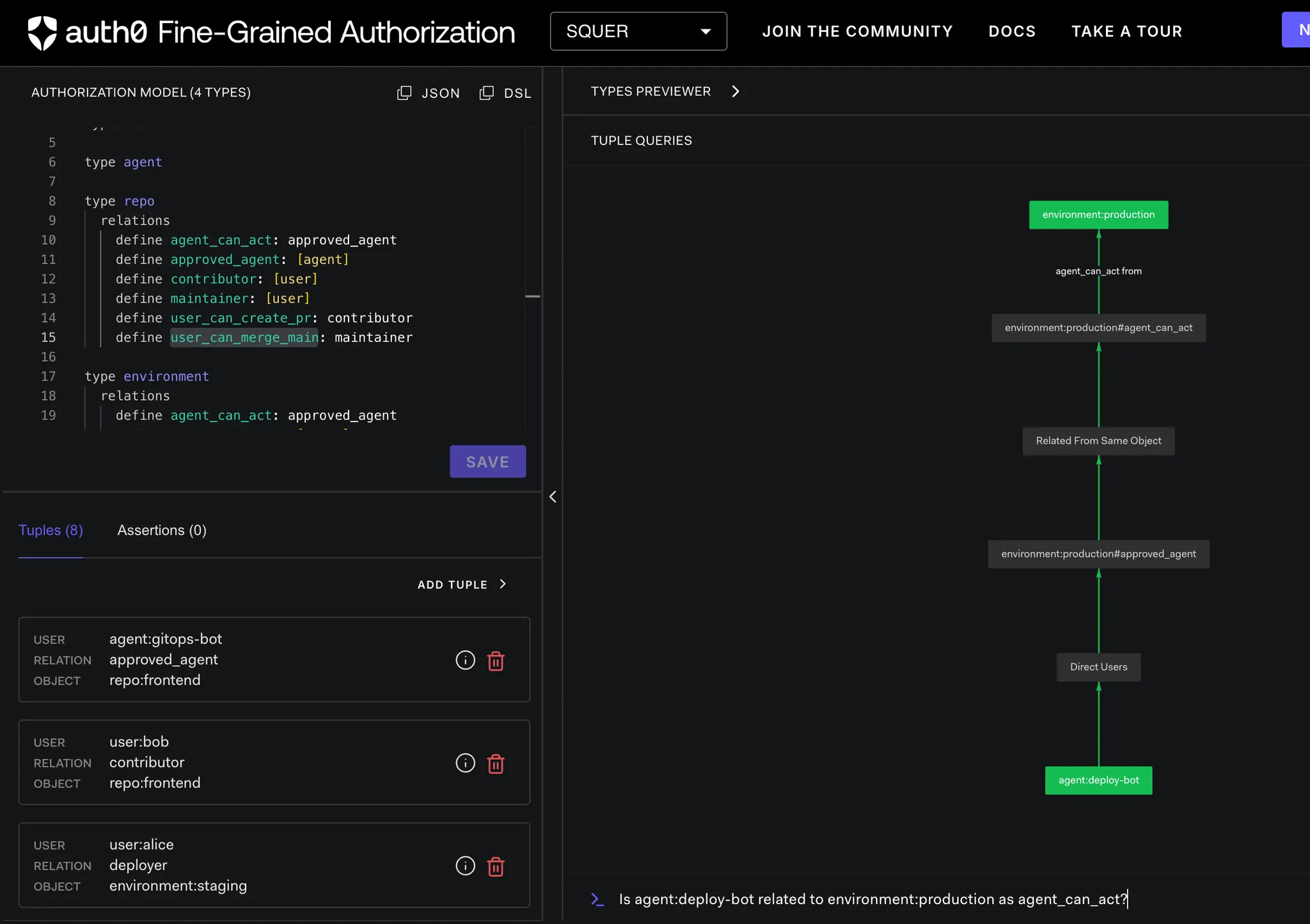

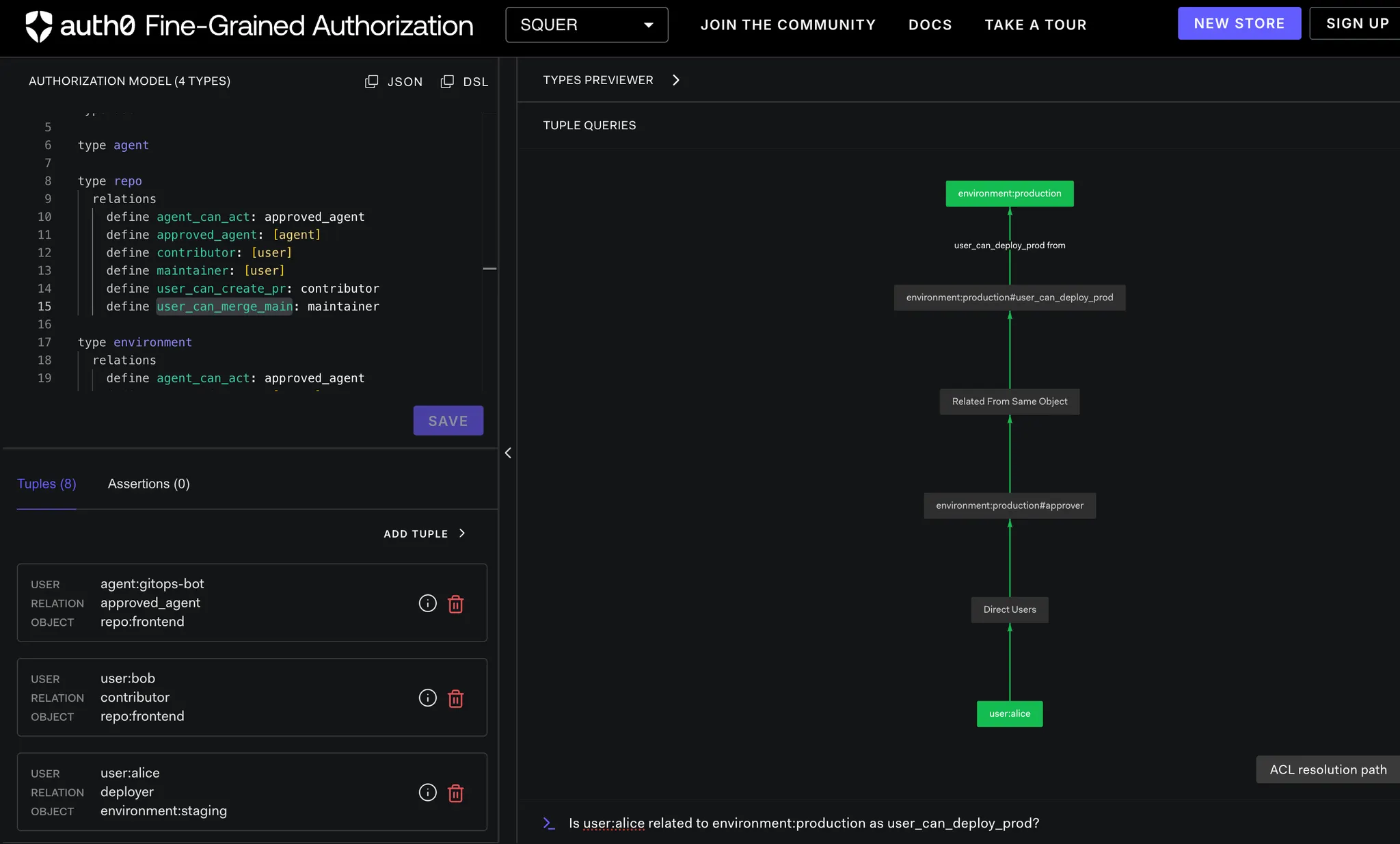

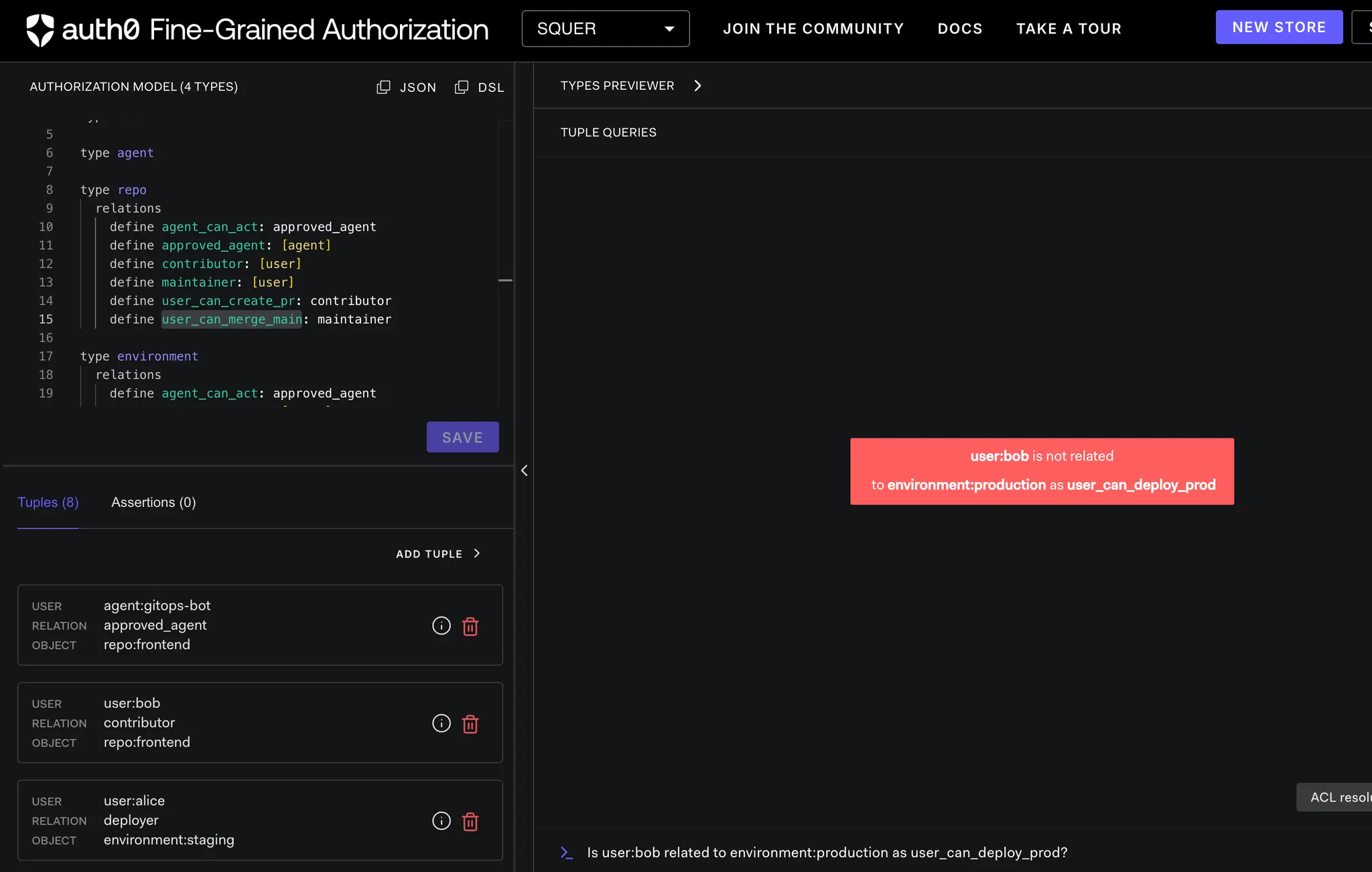

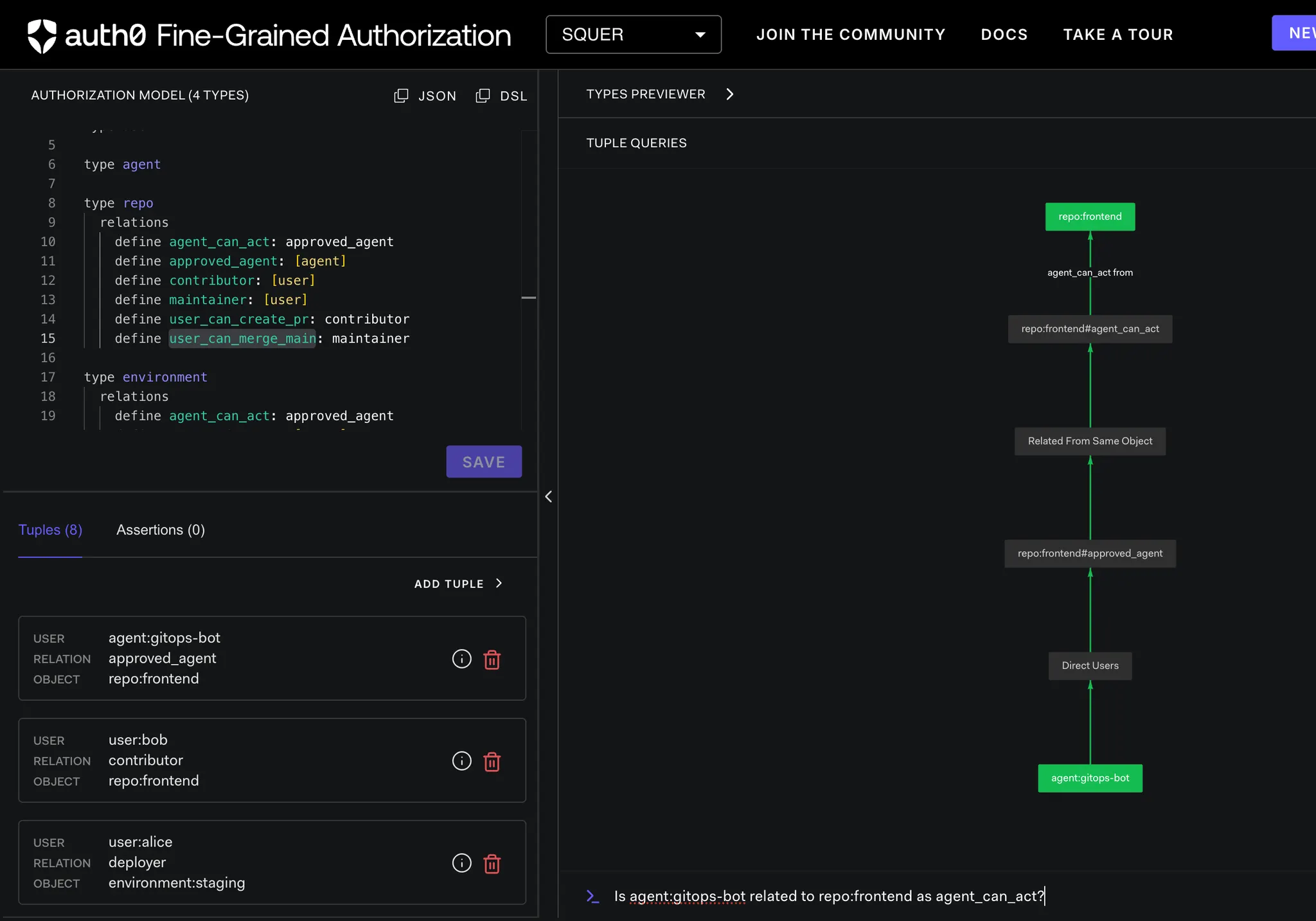

How OpenFGA Evaluates Access

When a request arrives—such as create PR or deploy to staging—the evaluation flow works like this:

- OpenFGA evaluates the relevant relationship tuples

- The authorization model maps those relationships to permissions

- The result is computed dynamically at request time

No permissions are cached in code.

No roles are hardwired into applications.
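
The idea of computing a permission from tuples at request time can be shown with a toy evaluator. This is a deliberately simplified sketch and not OpenFGA's algorithm: it only handles the one-step "permission is derived from a single direct relation" case used in this demo, and `MODEL` and `check` are hypothetical names.

```python
# Computed permission -> direct relation it is derived from, per object type.
MODEL = {
    "repo": {
        "user_can_create_pr": "contributor",
        "user_can_merge_main": "maintainer",
        "agent_can_act": "approved_agent",
    },
    "environment": {
        "user_can_deploy_staging": "deployer",
        "user_can_deploy_prod": "approver",
        "agent_can_act": "approved_agent",
    },
}


def check(tuples: set, user: str, relation: str, obj: str) -> bool:
    """Resolve the permission to its direct relation, then look up the fact."""
    object_type = obj.split(":", 1)[0]
    # Relations not listed in MODEL are treated as direct relations.
    direct = MODEL.get(object_type, {}).get(relation, relation)
    return (user, direct, obj) in tuples


if __name__ == "__main__":
    tuples = {("user:bob", "contributor", "repo:frontend")}
    print(check(tuples, "user:bob", "user_can_merge_main", "repo:frontend"))
    # Promote bob by adding a fact; no code path changes.
    tuples.add(("user:bob", "maintainer", "repo:frontend"))
    print(check(tuples, "user:bob", "user_can_merge_main", "repo:frontend"))
```

The second call gives a different answer from the first purely because the data changed, which is the property the text describes.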

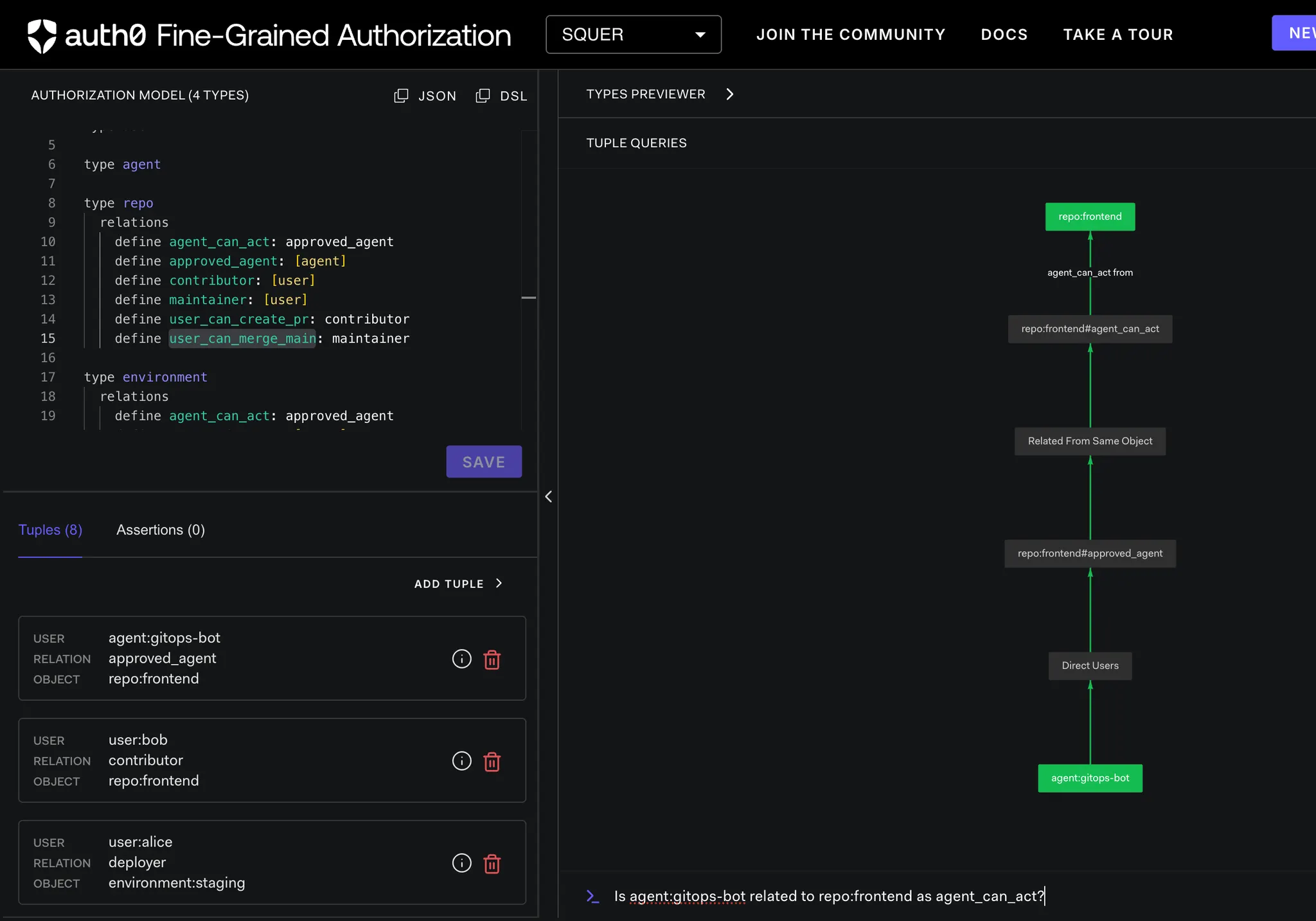

(See the OpenFGA UI visualization above to understand how relationship graphs are evaluated at runtime.)

Sample OpenFGA Authorization Model (Policy) 🧩

Below is a minimal representative model used in this demo. It demonstrates:

- Role relationships on repositories and environments

- Explicit agent approval

- Computed permissions derived from relationships

Note: This snippet is intentionally compact for readability. The full production model is available via SQUER on request.

Example Model (OpenFGA DSL Style)

    model
      schema 1.1

    type user

    type agent

    type repo
      relations
        define agent_can_act: approved_agent
        define approved_agent: [agent]
        define contributor: [user]
        define maintainer: [user]
        define user_can_create_pr: contributor
        define user_can_merge_main: maintainer

    type environment
      relations
        define agent_can_act: approved_agent
        define approved_agent: [agent]
        define approver: [user]
        define deployer: [user]
        define user_can_deploy_prod: approver
        define user_can_deploy_staging: deployer

Sample Trust Relationships (Tuples) 🔐

Tuples represent the source of truth in OpenFGA. They define who trusts whom, where, and in what capacity.

Repository tuples

- user:alice is a maintainer of repo:frontend

- user:bob is a contributor of repo:frontend

- agent:gitops-bot is approved for repo:frontend

    user: user:alice
    relation: maintainer
    object: repo:frontend

    user: user:bob
    relation: contributor
    object: repo:frontend

    user: agent:gitops-bot
    relation: approved_agent
    object: repo:frontend

Environment tuples

- user:alice and user:bob can deploy to staging (deployer)

- user:alice can deploy to production (approver)

- agent:deploy-bot is approved for staging and production

    user: user:bob
    relation: deployer
    object: environment:staging

    user: user:alice
    relation: deployer
    object: environment:staging

    user: user:alice
    relation: approver
    object: environment:production

    user: agent:deploy-bot
    relation: approved_agent
    object: environment:staging

    user: agent:deploy-bot
    relation: approved_agent
    object: environment:production
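
Tuples like these are typically loaded into a store through OpenFGA's Write API. As a minimal sketch, assuming the HTTP endpoint `POST /stores/{store_id}/write` and its `writes.tuple_keys` body shape (worth verifying against the API reference for your OpenFGA version), the request body could be assembled like this. The helper name `write_request_body` is hypothetical.

```python
import json
from typing import Iterable, Optional, Tuple


def write_request_body(tuples: Iterable[Tuple[str, str, str]],
                       authorization_model_id: Optional[str] = None) -> dict:
    """Build a Write request body from (user, relation, object) triples."""
    body = {
        "writes": {
            "tuple_keys": [
                {"user": user, "relation": relation, "object": obj}
                for user, relation, obj in tuples
            ]
        }
    }
    # Pinning the model ID is optional here; include it only when supplied.
    if authorization_model_id:
        body["authorization_model_id"] = authorization_model_id
    return body


if __name__ == "__main__":
    repo_tuples = [
        ("user:alice", "maintainer", "repo:frontend"),
        ("user:bob", "contributor", "repo:frontend"),
        ("agent:gitops-bot", "approved_agent", "repo:frontend"),
    ]
    print(json.dumps(write_request_body(repo_tuples), indent=2))
```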

Why This Model Works in Practice

This relationship-based approach mirrors how real DevOps platforms operate:

- Humans and automation are separate identities

- Trust is explicit and scoped

- Policies live as data, not code

- Authorization decisions are explainable and auditable

Most importantly, it prevents:

- Bots acting without approval

- Production changes without human authority

- Silent privilege escalation

- Hard-coded trust paths hidden in scripts

OpenFGA turns authorization into a transparent control plane—not an afterthought.

DevOps Scenarios (What the Demo Proves)

All scenarios below run against a real OpenFGA service deployed inside Kubernetes, using an actual authorization model and real trust relationships.

In this demo, AI agents never act directly against GitHub, CI systems, or Kubernetes APIs.

Instead, every operation follows a controlled execution path:

AI Agent → Agent Gateway → OpenFGA → GitHub / CI / Kubernetes

The Agent Gateway enforces policy at every step:

- Receives requests from the AI agent

- Validates identity and request context

- Asks OpenFGA whether the action is allowed

- Executes the action only if OpenFGA returns an allow decision

This ensures all DevOps actions—PR creation, merges, deployments—are governed by centralized, relationship-based policy, not hardcoded logic or implicit bot permissions.

Pods running in Demo Cluster (OpenFGA + Agent Gateway)

The demo cluster runs these core components:

- OpenFGA — the relationship-based authorization engine

- Agent Gateway — the centralized control plane for all agent-driven actions

Together, they enforce policy consistently across GitOps, CI, Kubernetes, and ChatOps workflows.

    kubectl get pods -n demo-system

    NAME                       READY   STATUS    RESTARTS
    agent-gateway-79f94f947c   1/1     Running   0          (listen: 8082)
    ai-agent-567ff7f975        1/1     Running   0
    openfga-66b6467446         1/1     Running   0          (listen: 8080)

All calls now go through the agent-gateway endpoints, which internally call OpenFGA. This provides:

- Consistent API — Single entry point for all authorization decisions

- Business Logic — Gateway handles the "user + agent" authorization pattern

- Real OpenFGA Integration — No hardcoded responses; all decisions come from OpenFGA
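
For illustration, here is one way the gateway's internal call to OpenFGA might be built. It assumes the OpenFGA HTTP Check endpoint (`POST /stores/{store_id}/check` with a `tuple_key` body and an `allowed` field in the response). The function names are hypothetical, as is the `openfga` hostname used in the example; only port 8080 comes from the pod listing above.

```python
import json
import urllib.request


def build_check_request(base_url: str, store_id: str,
                        user: str, relation: str, obj: str) -> urllib.request.Request:
    """Prepare (but do not send) a Check call for one user/relation/object."""
    payload = {"tuple_key": {"user": user, "relation": relation, "object": obj}}
    return urllib.request.Request(
        url=f"{base_url}/stores/{store_id}/check",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def is_allowed(response_body: bytes) -> bool:
    """Treat anything other than an explicit true as a deny."""
    return json.loads(response_body).get("allowed", False) is True


if __name__ == "__main__":
    # Hypothetical in-cluster address; replace the store ID with your own.
    request = build_check_request("http://openfga:8080", "YOUR_STORE_ID",
                                  "agent:gitops-bot", "agent_can_act", "repo:frontend")
    print(request.get_method(), request.full_url)
```

Defaulting to deny when `allowed` is missing keeps a malformed or unexpected response from turning into an implicit grant.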

Scenario 1: GitOps—PR Creation vs. Merge Protection

Goal

- Allow automated PR creation

- Prevent unauthorized merges to main

Policy Intent

- PR creation requires:

  - User is a contributor

  - GitOps agent is explicitly approved

- Merge to main additionally requires:

  - User is a maintainer

Tuple-Level Questions (Conceptual)

- Is user:bob related to repo:frontend as user_can_create_pr?

- Is agent:gitops-bot related to repo:frontend as agent_can_act?

OpenFGA Checks (PR Creation)

Check 1: User Permission

    OpenFGA-ai-agent-control ➜ curl -s "http://localhost:8082/after/create-pr?user=bob" | jq
    {
      "agent_check": {
        "allowed": true,
        "authorization_engine": "OpenFGA",
        "object": "repo:frontend",
        "relation": "agent_can_act",
        "store_id": "01KERMXB5D1WDF7PA4B6FBNATZ",
        "user": "agent:gitops-bot"
      },
      "allowed": true,
      "authorization": "OpenFGA Policy Engine",
      "explanation": "User must be contributor AND agent must be approved",
      "scenario": "create-pr",
      "user_check": {
        "allowed": true,
        "authorization_engine": "OpenFGA",
        "object": "repo:frontend",
        "relation": "user_can_create_pr",
        "store_id": "01KERMXB5D1WDF7PA4B6FBNATZ",
        "user": "user:bob"
      }
    }

Result

{"allowed":true}

Check 2: Agent Approval

    OpenFGA-ai-agent-control ➜ curl -s "http://localhost:8082/check?user=agent:gitops-bot&relation=agent_can_act&object_type=repo&object_id=frontend" | jq
    {
      "allowed": true,
      "authorization_engine": "OpenFGA",
      "object": "repo:frontend",
      "relation": "agent_can_act",
      "store_id": "01KERMXB5D1WDF7PA4B6FBNATZ",
      "user": "agent:gitops-bot"
    }

Result

{"allowed":true}

Gateway Decision

ALLOW_PR_CREATE = user_can_create_pr AND agent_can_act

✅ PR creation allowed

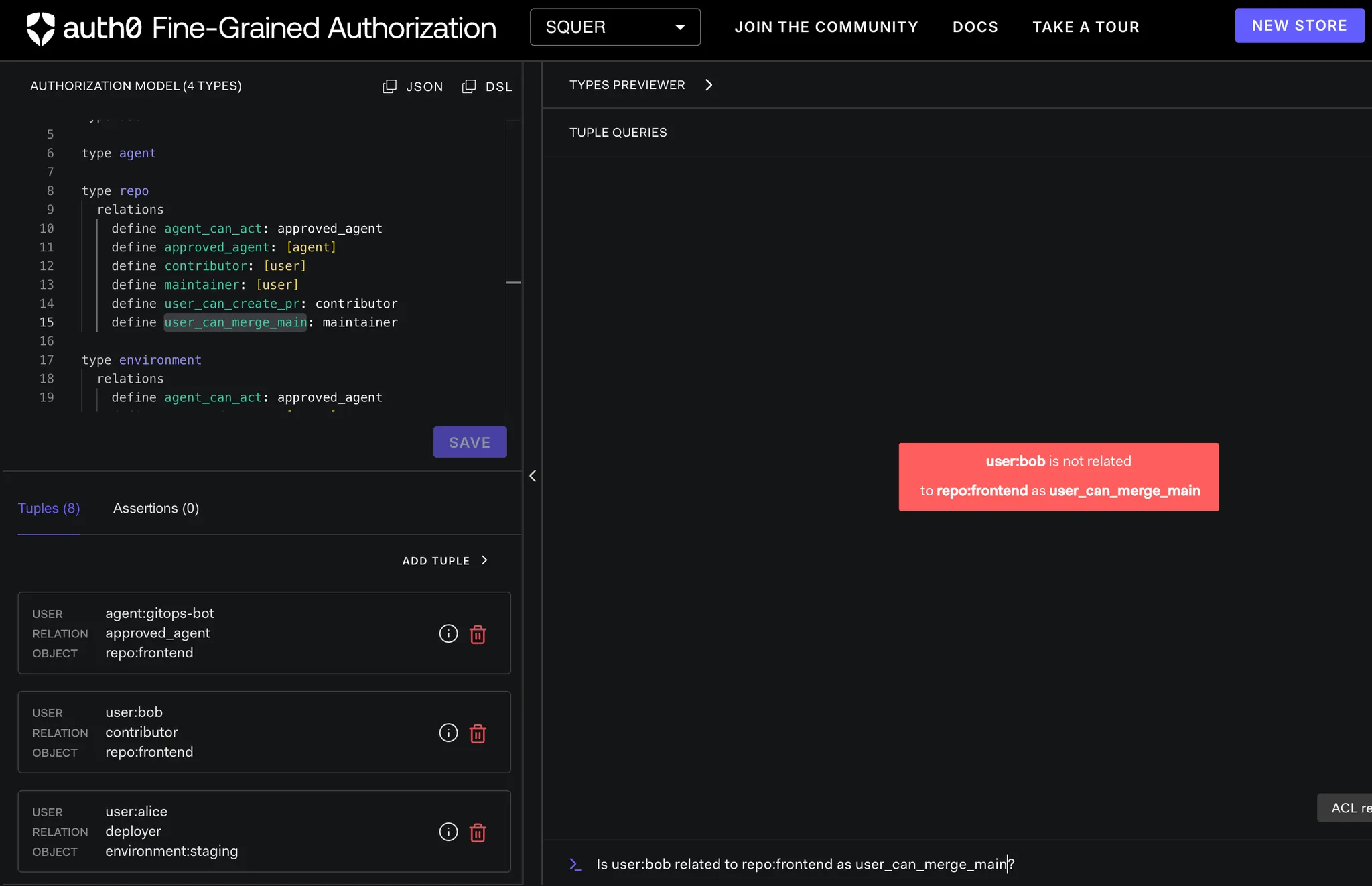

Merge Attempt (Same User)

Check 3: Maintainer Requirement

    OpenFGA-ai-agent-control ➜ curl -s "http://localhost:8082/after/merge-main?user=bob" | jq
    {
      "agent_check": {
        "allowed": true,
        "authorization_engine": "OpenFGA",
        "object": "repo:frontend",
        "relation": "agent_can_act",
        "store_id": "01KERMXB5D1WDF7PA4B6FBNATZ",
        "user": "agent:gitops-bot"
      },
      "allowed": false,
      "authorization": "OpenFGA Policy Engine",
      "explanation": "User must be maintainer AND agent must be approved",
      "scenario": "merge-main",
      "user_check": {
        "allowed": false,
        "authorization_engine": "OpenFGA",
        "object": "repo:frontend",
        "relation": "user_can_merge_main",
        "store_id": "01KERMXB5D1WDF7PA4B6FBNATZ",
        "user": "user:bob"
      }
    }

Result

{"allowed":false}

Final Decision

❌ Merge denied

This mirrors real Git platforms: contributors can open PRs, but only maintainers can merge.

Scenario 2: Kubernetes Deployment Governance

Goal

- Enable fast staging deployments

- Protect production deployments

Staging Deployment (Bob)

Tuple Questions

- Is user:bob related to environment:staging as user_can_deploy_staging?

- Is agent:deploy-bot related to environment:staging as agent_can_act?

Check 1: User Staging Deploy Permission

    OpenFGA-ai-agent-control ➜ curl -s "http://localhost:8082/after/deploy-staging?user=bob" | jq
    {
      "agent_check": {
        "allowed": true,
        "authorization_engine": "OpenFGA",
        "object": "environment:staging",
        "relation": "agent_can_act",
        "store_id": "01KERPA2JH98WECB82ENRZ57NY",
        "user": "agent:deploy-bot"
      },
      "allowed": true,
      "authorization": "OpenFGA Policy Engine",
      "explanation": "User must be deployer AND agent must be approved",
      "scenario": "deploy-staging",
      "user_check": {
        "allowed": true,
        "authorization_engine": "OpenFGA",
        "object": "environment:staging",
        "relation": "user_can_deploy_staging",
        "store_id": "01KERPA2JH98WECB82ENRZ57NY",
        "user": "user:bob"
      }
    }

Result

{"allowed":true}

Check 2: Agent Approval

    OpenFGA-ai-agent-control ➜ curl -s "http://localhost:8082/check?user=agent:deploy-bot&relation=agent_can_act&object_type=environment&object_id=staging" | jq
    {
      "allowed": true,
      "authorization_engine": "OpenFGA",
      "object": "environment:staging",
      "relation": "agent_can_act",
      "store_id": "01KERPA2JH98WECB82ENRZ57NY",
      "user": "agent:deploy-bot"
    }

Result

{"allowed":true}

Decision

✅ Bob can deploy to staging

Production Deployment (Alice)

Tuple Questions

- Is user:alice related to environment:production as user_can_deploy_prod?

- Is agent:deploy-bot related to environment:production as agent_can_act?

Check 3: Production Approval

    OpenFGA-ai-agent-control ➜ curl -s "http://localhost:8082/after/deploy-production?user=alice" | jq
    {
      "agent_check": {
        "allowed": true,
        "authorization_engine": "OpenFGA",
        "object": "environment:production",
        "relation": "agent_can_act",
        "store_id": "01KERPA2JH98WECB82ENRZ57NY",
        "user": "agent:deploy-bot"
      },
      "allowed": true,
      "authorization": "OpenFGA Policy Engine",
      "explanation": "User must be approver AND agent must be approved",
      "scenario": "deploy-production",
      "user_check": {
        "allowed": true,
        "authorization_engine": "OpenFGA",
        "object": "environment:production",
        "relation": "user_can_deploy_prod",
        "store_id": "01KERPA2JH98WECB82ENRZ57NY",
        "user": "user:alice"
      }
    }

    OpenFGA-ai-agent-control ➜ curl -s "http://localhost:8082/after/deploy-production?user=bob" | jq
    {
      "agent_check": {
        "allowed": true,
        "authorization_engine": "OpenFGA",
        "object": "environment:production",
        "relation": "agent_can_act",
        "store_id": "01KERPA2JH98WECB82ENRZ57NY",
        "user": "agent:deploy-bot"
      },
      "allowed": false,
      "authorization": "OpenFGA Policy Engine",
      "explanation": "User must be approver AND agent must be approved",
      "scenario": "deploy-production",
      "user_check": {
        "allowed": false,
        "authorization_engine": "OpenFGA",
        "object": "environment:production",
        "relation": "user_can_deploy_prod",
        "store_id": "01KERPA2JH98WECB82ENRZ57NY",
        "user": "user:bob"
      }
    }

Result

{"allowed":true} -> Alice

{"allowed":false} -> Bob

Decision

✅ Production deploy allowed for Alice

❌ Denied for Bob

This enforces real-world promotion rules.
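
Each gateway scenario exercised so far resolves to the same pair of questions with different parameters. One plausible way to express that mapping is a small declarative table, sketched below. The article does not show the gateway's internals, so `Scenario`, `SCENARIOS`, and `checks_for` are hypothetical; the scenario names, relations, agents, and objects are taken from the responses above.

```python
from typing import List, NamedTuple, Tuple


class Scenario(NamedTuple):
    user_relation: str  # permission the human must hold
    agent: str          # automation identity that acts
    obj: str            # resource the action targets


SCENARIOS = {
    "create-pr": Scenario("user_can_create_pr", "agent:gitops-bot", "repo:frontend"),
    "merge-main": Scenario("user_can_merge_main", "agent:gitops-bot", "repo:frontend"),
    "deploy-staging": Scenario("user_can_deploy_staging", "agent:deploy-bot",
                               "environment:staging"),
    "deploy-production": Scenario("user_can_deploy_prod", "agent:deploy-bot",
                                  "environment:production"),
}


def checks_for(scenario_name: str, user: str) -> List[Tuple[str, str, str]]:
    """Return the (user, relation, object) checks a scenario needs."""
    scenario = SCENARIOS[scenario_name]
    return [
        (f"user:{user}", scenario.user_relation, scenario.obj),
        (scenario.agent, "agent_can_act", scenario.obj),
    ]
```

With a table like this, staging and production differ only in data: the production row points at a stricter relation, and the enforcement code stays identical.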

Scenario 3: No Implicit Trust for Agents

Goal

Being a bot isn't enough. Explicit approval is required.

Approved Agent

- Is agent:gitops-bot related to repo:frontend as agent_can_act?

    OpenFGA-ai-agent-control ➜ curl -s "http://localhost:8082/check?user=agent:gitops-bot&relation=agent_can_act&object_type=repo&object_id=frontend" | jq
    {
      "allowed": true,
      "authorization_engine": "OpenFGA",
      "object": "repo:frontend",
      "relation": "agent_can_act",
      "store_id": "01KERPA2JH98WECB82ENRZ57NY",
      "user": "agent:gitops-bot"
    }

Result

{"allowed":true}

Unapproved Agent

- Is agent:random-bot related to repo:frontend as agent_can_act?

    OpenFGA-ai-agent-control ➜ curl -s "http://localhost:8082/check?user=agent:random-bot&relation=agent_can_act&object_type=repo&object_id=frontend" | jq
    {
      "allowed": false,
      "authorization_engine": "OpenFGA",
      "object": "repo:frontend",
      "relation": "agent_can_act",
      "store_id": "01KERPA2JH98WECB82ENRZ57NY",
      "user": "agent:random-bot"
    }

Result

{"allowed":false}

Why This Matters in Real Platforms

This isn't about introducing OpenFGA for fun—it's a DevOps control-plane pattern.

Here's what changes:

- Authorization logic moves out of scripts

- Policies become data, not code

- Agent power becomes scoped, not implicit

- Every decision is explainable:

  - who

  - what

  - where

  - why

It prevents:

- Bots merging to main

- Bots deploying to production without approval

- Silent privilege escalation

- Untraceable automation actions
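
To show what "explainable" could look like in practice, here is a sketch of an audit record that captures the who, what, where, and why of a single decision. The record layout and the `audit_record` helper are assumptions for illustration; the demo's actual logging format is not described in this article.

```python
from datetime import datetime, timezone
from typing import Optional


def audit_record(user: str, agent: str, operation: str, obj: str,
                 user_relation: str, user_allowed: bool, agent_allowed: bool,
                 now: Optional[datetime] = None) -> dict:
    """Turn the two check results into a self-describing audit entry."""
    if not user_allowed:
        why = f"{user} lacks {user_relation} on {obj}"
    elif not agent_allowed:
        why = f"{agent} lacks agent_can_act on {obj}"
    else:
        why = f"{user} has {user_relation} and {agent} has agent_can_act on {obj}"
    return {
        "when": (now or datetime.now(timezone.utc)).isoformat(),
        "who": {"user": user, "agent": agent},
        "what": operation,
        "where": obj,
        "why": why,
        "allowed": user_allowed and agent_allowed,
    }
```

Because the record names both the human and the agent, a shared bot identity no longer hides who requested an action.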

Disclaimer

This hands-on demo shows how authorization decisions work in real DevOps and AI agent workflows.

A production deployment would also include:

- Workload identity (OIDC)

- GitHub App authentication

- Persistent OpenFGA datastore

- Audit streaming and policy governance

The core pattern, however, remains the same.

Final Takeaway

Tokens authenticate.

OpenFGA authorizes.

As AI agents become first-class actors in DevOps platforms, authorization must shift from:

"Does this token work?"

to:

"Is this action allowed by policy, for this relationship, right now?"

OpenFGA provides the missing authorization layer to make that shift safe, scalable, and auditable.

Want the Full Demo and Production-Ready Setup?

This blog focuses on concepts, architecture, and real execution paths—showing how OpenFGA enables safe, policy-driven AI agents in Kubernetes and DevOps workflows.

If you'd like access to the complete working setup, including:

- Production-grade Kubernetes manifests

- A hardened Agent Gateway with real OpenFGA enforcement

- Reusable authorization models and trust patterns

- End-to-end GitOps + CI/CD + Kubernetes scenarios

- Hands-on internal demos or enablement workshops

—we'd be happy to collaborate.

📬 Get in Touch

- 📨 Ankit Asthana—ankit.asthana@squer.io

- 📨 Tom Graupner—tom.graupner@squer.io

Let's build AI-powered DevOps platforms that are autonomous, auditable, and policy-controlled by design.