Kubernetes is powerful—but debugging it can feel like a dark art. Even for seasoned DevOps engineers, diagnosing issues across Pods, Services, and YAML configurations often involves tedious log scraping, manual correlation, and hours of frustration. Monitoring tools flood your dashboards with metrics but rarely explain what went wrong or how to fix it. That’s where K8sGPT comes in. This CNCF Sandbox project pairs rule-based scanning with Generative AI to turn low-level Kubernetes errors into human-readable insights. From root cause analysis to policy compliance and developer onboarding, K8sGPT empowers teams to move faster, resolve incidents more confidently, and reduce cognitive overhead. In this article, you’ll learn how to run K8sGPT in your environment, use it with or without AI backends, and embed it into real-world SRE workflows to elevate your observability game.

🧠 Introduction: Why Kubernetes Needs AI Today

Kubernetes is powerful—but troubleshooting it is notoriously painful. Even seasoned DevOps engineers and SREs often find themselves sifting through cryptic logs, misconfigured YAMLs, and endless dashboards. Despite excellent monitoring tools, root cause analysis remains slow and manual.

Meet K8sGPT: An open-source diagnostic powerhouse that pairs rule-based analysis with Generative AI to explain Kubernetes issues in plain English—fast, smart, and developer-friendly.

In this blog, you'll learn:

✅ What K8sGPT is and how it works

✅ How to run it locally using Minikube

✅ Benefits of the K8sGPT Operator for real-time insights

✅ Key integrations and privacy features

✅ Why it's becoming essential in modern SRE workflows

Let's explore how K8sGPT transforms your Kubernetes observability game.

🔍 What Is K8sGPT? A Quick Overview

K8sGPT is a Kubernetes diagnostic tool that scans your cluster, detects issues, and translates technical failures into human-readable explanations using AI.

📢 Highlights

- 🌐 Launched at KubeCon Europe 2023

- ✅ Accepted into the CNCF Sandbox (Dec 2023)

- ⭐ 4,000+ GitHub stars and counting

Unlike traditional monitoring solutions that overwhelm you with logs and metrics, K8sGPT interprets the issues and helps you understand what went wrong and how to fix it—functioning like an expert SRE co-pilot.

🧰 How K8sGPT Works: Under the Hood

K8sGPT uses a pluggable analyzer engine that inspects various Kubernetes objects—Pods, Services, PVCs, Network Policies, and more. Here's the high-level workflow:

- Connects to the Kubernetes API server via kubeconfig

- Analyzes resources using built-in or custom analyzers

- Summarizes issues using natural language explanations

- (Optional) Sends results to an AI backend for deeper remediation hints

🔧 You can run it from the CLI or deploy it in-cluster as an Operator for continuous monitoring.
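
The workflow above can be sketched in a few lines of Python. This is a conceptual illustration only, not K8sGPT's actual code (the project itself is written in Go); the `Finding` type, the `ANALYZERS` registry, and the snapshot layout are hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Finding:
    kind: str      # resource kind, e.g. "Ingress"
    name: str      # "<namespace>/<name>"
    message: str   # rule-based description of the problem


# Hypothetical snapshot layout: {"Ingress": [...], "Service": [...]}
Snapshot = Dict[str, List[dict]]
Analyzer = Callable[[Snapshot], List[Finding]]

ANALYZERS: Dict[str, Analyzer] = {}


def analyzer(kind: str):
    """Register a function as the analyzer for one resource kind."""
    def register(fn: Analyzer) -> Analyzer:
        ANALYZERS[kind] = fn
        return fn
    return register


@analyzer("Ingress")
def analyze_ingress(snapshot: Snapshot) -> List[Finding]:
    """Flag Ingresses with no class or with backends that do not exist."""
    services = {(s["namespace"], s["name"]) for s in snapshot.get("Service", [])}
    findings = []
    for ing in snapshot.get("Ingress", []):
        full_name = f'{ing["namespace"]}/{ing["name"]}'
        if not ing.get("ingressClassName"):
            findings.append(Finding("Ingress", full_name,
                                    "Ingress does not specify an Ingress class."))
        for backend in ing.get("backends", []):
            if (ing["namespace"], backend) not in services:
                findings.append(Finding(
                    "Ingress", full_name,
                    f'References a non-existent service: {ing["namespace"]}/{backend}.'))
    return findings


def run_analyzers(snapshot: Snapshot,
                  filters: Optional[List[str]] = None) -> List[Finding]:
    """Run every registered analyzer, or only the kinds named in `filters`."""
    findings = []
    for kind, fn in ANALYZERS.items():
        if filters is None or kind in filters:
            findings.extend(fn(snapshot))
    return findings


# Tiny in-memory snapshot so the sketch runs without a cluster.
snapshot = {
    "Ingress": [{"namespace": "demo", "name": "web", "backends": ["missing-service"]}],
    "Service": [],
}
for f in run_analyzers(snapshot):
    print(f"{f.kind} {f.name}: {f.message}")
```

The point of the registry is that new checks can be added without touching the runner, which is the property the "pluggable analyzer engine" description refers to.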


💻 Hands-On Demo: K8sGPT on Local Minikube

Let’s walk through a real demo of running K8sGPT on your laptop using Minikube.

🖥️ Step 1: Start a Cluster

macOS:

brew install minikube

minikube start --cpus=4 --memory=6g

Linux (Ubuntu):

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

minikube start --cpus=4 --memory=6g

Windows (PowerShell):

choco install minikube

minikube start --cpus=4 --memory=6g

🔧 Step 2: Install the K8sGPT CLI

K8sGPT offers binaries for major operating systems. Here's how to install it:

🖥️ macOS (via Homebrew)

brew install k8sgpt

Alternatively, download the binary from the GitHub Releases page.

🐧 Linux (Ubuntu/Debian)

curl -LO https://github.com/k8sgpt-ai/k8sgpt/releases/latest/download/k8sgpt_Linux_x86_64.tar.gz

tar -xvzf k8sgpt_Linux_x86_64.tar.gz

sudo mv k8sgpt /usr/local/bin/

Then verify:

k8sgpt version

🪟 Windows (PowerShell)

- Download the latest release: 👉 K8sGPT Windows Binary

- Extract the zip and add the path to k8sgpt.exe in your Environment Variables > PATH

- Confirm it's installed:

k8sgpt version

🔐 Step 3: Authenticate AI Provider

To use AI explanations, authenticate with your provider:

k8sgpt auth add --backend <provider_name>

You can choose from:

- OpenAI (GPT-4, GPT-3.5) (Default)

- Cohere

- Azure OpenAI

- Amazon Bedrock

- LocalAI (for self-hosted LLMs)

⚠️ Step 4: Break Your Cluster (On Purpose 😈)

Here’s a bad Ingress manifest with multiple issues:

# manifest/bad-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: broken-ingress
  namespace: k8sgpt-demo
spec:
  rules:
    - host: demo.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: missing-service
                port:
                  number: 80

Apply it:

kubectl create ns k8sgpt-demo

kubectl apply -f manifest/bad-ingress.yaml

🤖 Step 5: Analyze with K8sGPT

k8sgpt analyze --explain

Expected output:

Error: Ingress k8sgpt-demo/broken-ingress

Issues:

- Ingress does not specify an Ingress class.

- References a non-existent service: k8sgpt-demo/missing-service.

Solution: 1. Add a valid Ingress class.

2. Ensure the referenced service name is correct and exists in the namespace.
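
For scripting, the same findings can be consumed as JSON instead of text. The sketch below assumes the `k8sgpt analyze --output json` report has a top-level `results` list whose items carry `kind`, `name`, `error` (a list of objects with a `Text` field) and `details`; check those field names against the output of your installed version before relying on them. `run_analysis` and `summarize` are helper names made up for this example.

```python
import json
import subprocess
from typing import List


def run_analysis(namespace: str) -> dict:
    """Run the CLI and return the parsed JSON report (requires k8sgpt on PATH)."""
    completed = subprocess.run(
        ["k8sgpt", "analyze", "--explain",
         "--namespace", namespace, "--output", "json"],
        capture_output=True, text=True, check=True,
    )
    return json.loads(completed.stdout)


def summarize(report: dict) -> List[str]:
    """Turn a parsed report into one line per detected problem."""
    lines = []
    # `or []` covers both a missing key and an explicit null.
    for result in report.get("results") or []:
        for failure in result.get("error") or []:
            lines.append(f'{result["kind"]} {result["name"]}: {failure["Text"]}')
    return lines


# Hand-written sample in the assumed shape, so the sketch runs without a cluster.
sample = json.loads("""
{
  "status": "ProblemDetected",
  "results": [
    {"kind": "Ingress",
     "name": "k8sgpt-demo/broken-ingress",
     "error": [{"Text": "Ingress does not specify an Ingress class."}],
     "details": ""}
  ]
}
""")
for line in summarize(sample):
    print(line)
```

Against a live cluster, `summarize(run_analysis("k8sgpt-demo"))` would replace the hand-written sample.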

🛡️ Step 6 (Optional): Anonymize Sensitive Data

k8sgpt analyze --explain --anonymize

This masks object names and labels before sending to the AI provider.
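
Conceptually, anonymization replaces sensitive strings with opaque placeholders before the prompt leaves your machine, then swaps the real values back into the answer. The sketch below illustrates that round trip only; it is not K8sGPT's implementation, and `mask`, `unmask`, and the placeholder format are hypothetical.

```python
import re
import secrets
from typing import Dict, Iterable, Tuple


def mask(text: str, sensitive: Iterable[str]) -> Tuple[str, Dict[str, str]]:
    """Replace each sensitive value with a random token.

    Returns the masked text and a token -> original mapping.
    """
    # Longest first, so a short name never matches inside a longer one.
    values = sorted({v for v in sensitive if v}, key=len, reverse=True)
    if not values:
        return text, {}
    token_for = {v: "masked-" + secrets.token_hex(4) for v in values}
    pattern = re.compile("|".join(re.escape(v) for v in values))
    # A single regex pass avoids re-replacing text inside inserted tokens.
    masked = pattern.sub(lambda m: token_for[m.group(0)], text)
    return masked, {token: value for value, token in token_for.items()}


def unmask(text: str, mapping: Dict[str, str]) -> str:
    """Restore the original values in a response that mentions the tokens."""
    if not mapping:
        return text
    pattern = re.compile("|".join(re.escape(t) for t in mapping))
    return pattern.sub(lambda m: mapping[m.group(0)], text)


prompt = "Ingress k8sgpt-demo/broken-ingress references service missing-service"
masked, mapping = mask(prompt, ["k8sgpt-demo", "broken-ingress", "missing-service"])
print(masked)                   # names replaced by random tokens
print(unmask(masked, mapping))  # original prompt restored
```

Only the masked string would be sent to the provider; the mapping stays local and is used to make the returned explanation readable again.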

👷 K8sGPT Operator: In-Cluster AI Diagnostics, Declaratively Managed

The K8sGPT Operator enables fully automated, AI-powered diagnostics from inside your Kubernetes cluster. Unlike the CLI tool, the Operator allows you to define custom resources that control how, when, and where diagnostics are performed—with all results published as CRDs.

This enables you to:

- Continuously scan workloads with customizable scope

- Integrate results into GitOps, Slack, or Backstage

- Configure AI models, secrets, and scan options declaratively

- Monitor remote clusters using kubeconfigs

📦 Installation (Helm)

helm repo add k8sgpt https://charts.k8sgpt.ai/

helm repo update

helm install release k8sgpt/k8sgpt-operator -n k8sgpt-operator-system --create-namespace

🔐 Step 1: Create the AI Secret

kubectl create secret generic k8sgpt-sample-secret \
  --from-literal=openai-api-key=$OPENAI_TOKEN \
  -n k8sgpt-operator-system

🧠 Step 2: Define the K8sGPT Custom Resource

apiVersion: core.k8sgpt.ai/v1alpha1
kind: K8sGPT
metadata:
  name: k8sgpt-sample
  namespace: k8sgpt-operator-system
spec:
  ai:
    enabled: true
    model: gpt-4o-mini
    backend: openai
    secret:
      name: k8sgpt-sample-secret
      key: openai-api-key
  noCache: false
  repository: ghcr.io/k8sgpt-ai/k8sgpt
  version: v0.4.1

kubectl apply -f k8sgpt.yaml

📄 What is the Result CR? Understanding Operator Output

When the Operator detects a cluster issue, it automatically generates a Result CR in the same namespace:

kubectl get results -n k8sgpt-operator-system -o json | jq .

{
  "kind": "Result",
  "spec": {
    "details": "...",
    "explanation": "Add the control-plane label to the endpoint..."
  }
}

✅ These are AI-powered diagnostics, stored in-cluster and accessible via kubectl, GitOps, or dashboards.
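
Because results are ordinary custom resources, they are easy to post-process. The sketch below reads the JSON produced by the `kubectl get results ... -o json` command above and prints one line per result. It assumes the trimmed field layout shown in the sample (`spec.details`, `spec.explanation`); `load_results` and `describe` are names invented for this example, and the `metadata.name` value in the sample data is made up.

```python
import json
import subprocess
from typing import List

NAMESPACE = "k8sgpt-operator-system"


def load_results(namespace: str = NAMESPACE) -> List[dict]:
    """Fetch Result CRs with kubectl (needs cluster access) and return the items."""
    out = subprocess.run(
        ["kubectl", "get", "results", "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    # `kubectl get ... -o json` wraps the objects in a List with an "items" array.
    return json.loads(out).get("items", [])


def describe(result: dict) -> str:
    """Build a one-line summary, tolerating missing optional fields."""
    name = (result.get("metadata") or {}).get("name", "<unnamed>")
    spec = result.get("spec") or {}
    text = spec.get("explanation") or spec.get("details") or "no explanation recorded"
    return f"{name}: {text}"


# Sample item in the shape shown above, so the sketch runs without a cluster.
sample_items = [{
    "kind": "Result",
    "metadata": {"name": "example-result"},  # made-up name for illustration
    "spec": {
        "details": "...",
        "explanation": "Add the control-plane label to the endpoint...",
    },
}]
for item in sample_items:
    print(describe(item))
```

In a real cluster, iterate over `load_results()` instead of `sample_items`.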

🌐 Monitoring Remote Clusters

The Operator can monitor multiple Kubernetes clusters by referencing remote kubeconfig secrets.

apiVersion: core.k8sgpt.ai/v1alpha1
kind: K8sGPT
metadata:
  name: capi-quickstart
  namespace: k8sgpt-operator-system
spec:
  ai:
    anonymized: true
    backend: openai
    model: gpt-4o-mini
    secret:
      key: api_key
      name: my_openai_secret
  kubeconfig:
    key: value
    name: capi-quickstart-kubeconfig

This keeps credentials and diagnostics isolated per cluster without polluting the remote clusters.

🔖 Labels for Filtering Results

Each Result CR is labeled with:

"labels": {
  "k8sgpts.k8sgpt.ai/backend": "openai",
  "k8sgpts.k8sgpt.ai/name": "k8sgpt-sample",
  "k8sgpts.k8sgpt.ai/namespace": "k8sgpt-operator-system"
}

Use these to route alerts to team-specific Slack channels or GitOps branches.
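
One way to use these labels is a small router that maps the owning K8sGPT resource to a destination. The sketch below is illustrative: the `ROUTES` table, the channel names, and the `route` helper are hypothetical, and actually posting to Slack is left out.

```python
from typing import Dict, List

NAME_LABEL = "k8sgpts.k8sgpt.ai/name"

# Hypothetical mapping from K8sGPT resource name to a team channel.
ROUTES: Dict[str, str] = {
    "k8sgpt-sample": "#platform-alerts",
    "capi-quickstart": "#cluster-api-alerts",
}
DEFAULT_CHANNEL = "#sre-triage"


def route(result: dict) -> str:
    """Pick a channel from the Result's labels, falling back to a default."""
    labels = (result.get("metadata") or {}).get("labels") or {}
    return ROUTES.get(labels.get(NAME_LABEL, ""), DEFAULT_CHANNEL)


def group_by_channel(results: List[dict]) -> Dict[str, List[dict]]:
    """Bucket results by destination channel, preserving input order."""
    grouped: Dict[str, List[dict]] = {}
    for result in results:
        grouped.setdefault(route(result), []).append(result)
    return grouped


# Minimal stand-ins for Result CRs; only the fields used here are present.
results = [
    {"metadata": {"name": "r1", "labels": {NAME_LABEL: "k8sgpt-sample"}}},
    {"metadata": {"name": "r2", "labels": {}}},
]
for channel, items in group_by_channel(results).items():
    print(channel, [r["metadata"]["name"] for r in items])
```

For quick manual filtering, a standard kubectl label selector does the same selection, for example `kubectl get results -n k8sgpt-operator-system -l k8sgpts.k8sgpt.ai/name=k8sgpt-sample`.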

🔌 Native Integrations: From Dashboards to Security

🔒 Privacy-First: Built for Secure Environments

Worried about sending your cluster data to an external AI provider? K8sGPT has you covered:

- ✅ Anonymization masks sensitive data before sending

- ✅ LocalAI support for air-gapped or regulated environments

- ✅ Custom analyzers for in-house security rules

Use the --anonymize flag or disable AI completely for rule-only scanning.

🧠 Why K8sGPT Beats Traditional Monitoring Tools

📦 Use Cases in Real-World SRE Workflows

K8sGPT goes beyond traditional troubleshooting by integrating with security scanners, policy engines, and custom AI backends. Here's how you can use it in real-world SRE workflows, with enriched examples using Kyverno and custom analyzers.

1. 🔍 Postmortem Triage (Outage RCA)

Scenario: After an outage, you want to understand what went wrong with pods, services, or workloads.

k8sgpt analyze --explain --namespace prod

Sample Output:

Resource: Pod/prod/api-v2

Issue: CrashLoopBackOff

Explanation: Image "api:v2.0" is missing in the registry

Solution: Correct the image tag or upload the missing version.

Ideal for: On-call engineers, SEVs, post-incident analysis

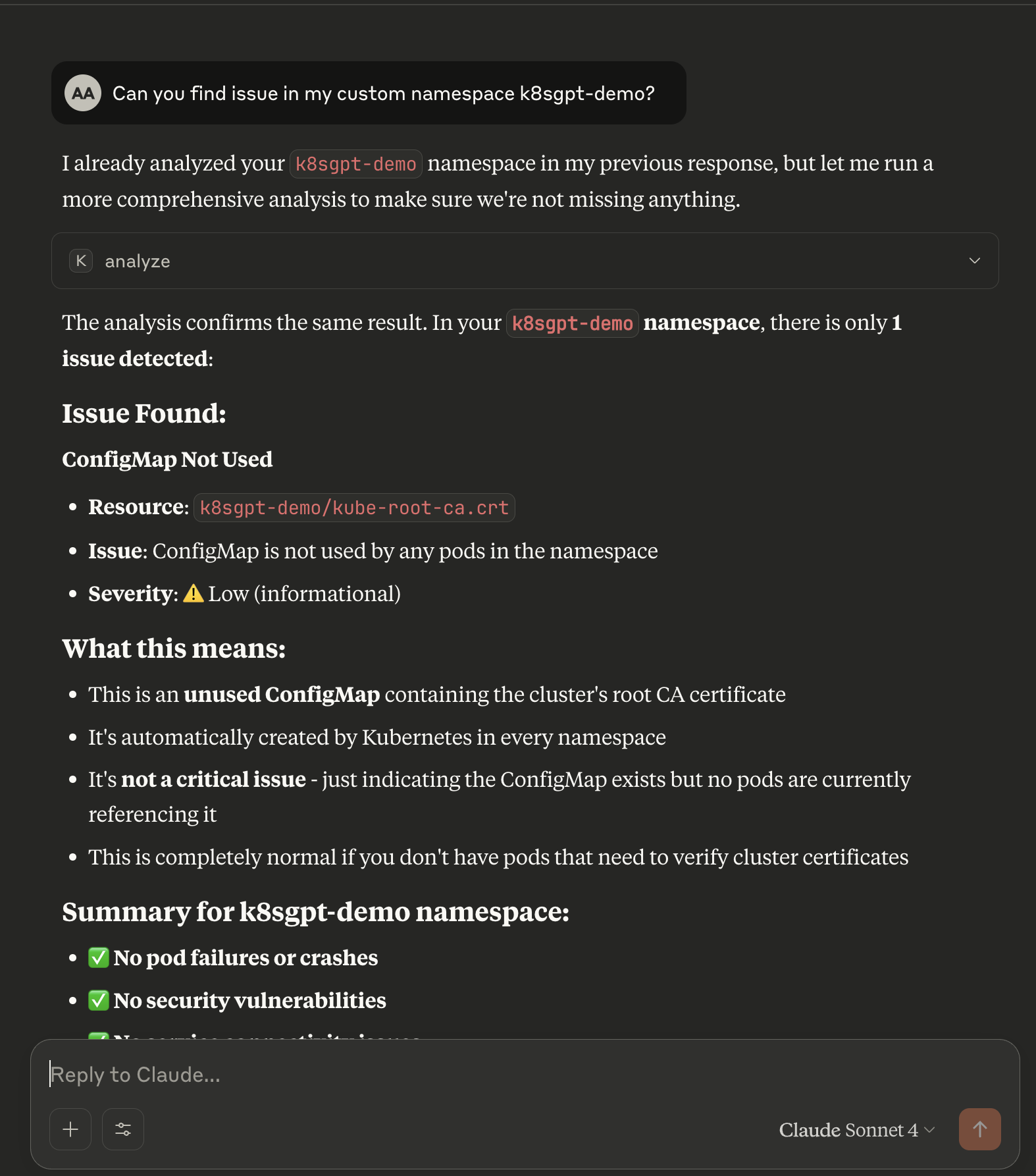

2. 🧠 Real-Time Agentic Analysis via MCP

Scenario: You want an AI agent (e.g., Claude, LangChain, or a custom app) to interact with your Kubernetes cluster for live debugging, policy suggestion, or context-aware recommendations.

Instead of running static k8sgpt analyze commands, you expose K8sGPT through the Model Context Protocol (MCP), which allows bi-directional JSON-based communication over stdin/stdout. This is ideal for building agentic workflows or developer assistants.

🔧 Setup: Start the MCP server:

k8sgpt serve --mcp --backend openai

🧩 Request Example: Your Claude agent (or Python script) sends:

{
  "type": "analyze",
  "payload": {
    "namespace": "default",
    "filters": ["Pod", "Deployment"]
  }
}

💡 Sample Response (Claude Desktop):

3. 🔧 Policy Compliance (Kyverno + K8sGPT)

Scenario: You want to enforce best practices using Kyverno and explain violations.

Enable Kyverno integration:

k8sgpt integration activate kyverno

Then analyze PolicyReport/ClusterPolicyReport:

k8sgpt analyze --filter ClusterPolicyReport --explain

Sample Output:

Policy Violation: disallow-latest-tag

Resource: Pod/dev-app

Explanation: Container image uses `latest` tag, which is mutable.

Fix: Use a specific version like `myapp:v1.0.3`

Ideal for: Platform teams, compliance audits, policy enforcement
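
The rule behind this sample violation is simple to state: an image reference is mutable if it has no tag or its tag is `latest`, unless it is pinned by digest. The function below is a simplified sketch of that check, not Kyverno's implementation; `uses_mutable_tag` is a name made up for the example.

```python
def uses_mutable_tag(image: str) -> bool:
    """Return True when the image has no tag or is tagged `latest`."""
    if "@" in image:
        # Pinned by digest (e.g. app@sha256:...), so the reference is immutable.
        return False
    # Drop the registry and path; the registry part may contain host:port.
    last_segment = image.rsplit("/", 1)[-1]
    if ":" not in last_segment:
        # No tag: container runtimes fall back to `latest`.
        return True
    tag = last_segment.rsplit(":", 1)[1]
    return tag == "latest"


for image in ["myapp:latest", "myapp", "myapp:v1.0.3", "localhost:5000/myapp"]:
    print(image, "->", "violation" if uses_mutable_tag(image) else "ok")
```

Splitting on the last `/` first matters: without it, the port in `localhost:5000/myapp` would be mistaken for a tag.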

4. 👩‍💼 Developer Onboarding (Self-Serve Debugging)

Scenario: A junior developer deploys a misconfigured app and gets stuck. Instead of escalating to an SRE, they debug it themselves.

k8sgpt analyze --namespace dev --explain

Sample Output:

Issue: Pod failed to start due to missing imagePullSecret

Fix: Add imagePullSecret referencing private registry credentials.

Ideal for: Reducing Slack interruptions, onboarding engineers faster

5. 🌐 RAG-Enhanced Explanations (Custom REST Backend)

Scenario: You want to enrich K8sGPT explanations using domain-specific documentation (e.g., CNCF FAQs).

Register a custom REST-based AI backend (e.g., one built with Llama3 + Qdrant):

./k8sgpt auth add --backend customrest --baseurl http://localhost:8090/completion --model llama3.1

Run analysis with AI-backed explanations:

k8sgpt analyze --backend customrest --explain

Sample Output:

Error: Prometheus scrape config fails

RAG Response: Based on CNCF best practices, invalid relabeling with 'keeps' should be 'keep'. See: prometheus.io/docs...

Ideal for: AI agents, RAG pipelines, domain-aware remediation suggestions


🚀 What’s Coming Next?

🔮 K8sGPT’s roadmap includes:

- Interactive CLI chat interface

- Auto-remediation hooks with tools like Karpenter

- Deeper integrations with ArgoCD, Flux

- Support for HuggingFace and Mistral LLMs

📈 Summary (TL;DR)

- K8sGPT is a CNCF Sandbox project that uses AI to simplify Kubernetes troubleshooting.

- It runs as a CLI or Operator and explains issues in plain English.

- Works with OpenAI, Azure, Bedrock, Cohere, or self-hosted LLMs.

- A must-have tool for modern SREs, DevOps teams, and platform engineers.

✅ Easy to install

✅ Safe for production

✅ Boosts productivity and observability instantly

📚 Resources & Links

🙌 Wrap Up: Ready to Debug Smarter?

With K8sGPT, Kubernetes debugging moves from chaotic log scraping to calm, AI-guided clarity. Whether you're running a homelab with Minikube or managing enterprise-grade clusters, this tool is a worthy addition to your DevOps arsenal.

👉 Try it today and experience Kubernetes observability reimagined.

🤝 Let’s Connect — Bring K8sGPT and AI-Powered Kubernetes to Your Team

At SQUER, we’re passionate about empowering teams to adopt practical AI solutions that make real impact in cloud-native environments. Whether you're just getting started with Kubernetes, looking to integrate AI into your platform workflows, or interested in running a live K8sGPT demo or workshop with your team—we’d love to help.

📬 Have questions or want to dive deeper?

Reach out to us directly or connect with us at squer.io to explore:

- Custom workshops or internal enablement sessions

- Platform and SRE maturity assessments

- Secure GenAI and LLM adoption strategies

- End-to-end K8sGPT setup in your environment

📨 Ankit Asthana: ankit.asthana@squer.io

📨 Tom Graupner: tom.graupner@squer.io

Let’s unlock the next level of Kubernetes insight—together.