The rise of Agentic AI signals a shift from passive language models to autonomous, goal-driven systems. Building on the success of ChatGPT, agentic systems combine reasoning, tool usage, and adaptability to execute complex tasks with minimal human input. This article examines what defines Agentic AI, where it is already making an impact, and why it is likely here to stay.

ChatGPT was released at the end of 2022. Although access to GPT-3 had been available well before that, the typical user was not interested in fiddling with prompts and parameters. Still, the results back then were impressive enough to keep researchers busy probing the model's limitations.

With the release of ChatGPT, the world changed. It was almost impossible to ignore a chatbot that could solve all kinds of tasks for you and came with a solid free tier. Initially, ChatGPT was a GPT-3.5 model fine-tuned to follow instructions (a sibling of InstructGPT), and it was replaced by newer models later on. A basic text box in which you simply describe what you want was easy enough for every user to get used to. Similar to a well-known search engine, right? 😉


The reason for its success? Easy access and an excellent user experience. Although the UI for GPT-3 was relatively simple, you had to learn about a few parameters to get better results. With ChatGPT, you just wrote in natural language, and everything else was preconfigured and automated for you.

Tools like LangChain were quickly adopted to enrich the results, e.g., by running Google searches in the background. But those tools were limited, and although they flourished in the garden of use cases for ChatGPT and other LLMs, they were ultimately trivial. Agentic AI is the next logical step in making such tools smarter.

IBM calls Agentic AI the top technology trend for 2025, and a look at the NeurIPS 2024 schedule quickly reveals how important the topic currently is in research. Recently, OpenAI published a platform to set up different agents for versatile tasks. Agentic AI will last, but how will you notice it?

What is Agentic AI?

According to IBM, Agentic AI has five key features:

Autonomy:

Agentic systems can execute long-term tasks without constant human intervention.

Proactivity/Problem Solving:

On their own, LLMs can't reach out to external tools such as search engines or databases. Agentic systems pick up the nuances and context of a task, derive a concrete strategy from them, and actively call the required tools during execution. The LLM acts as an orchestrator that brings in the right tools to overcome obstacles or improve the output.

Interactivity:

Given their proactive nature, agentic systems can gather data from the outside environment and adjust their behaviour in real time.

Adaptable, yet specialised:

An agentic system is not bound to a fixed set of steps, though it can be set up for a specialised task. Imagine you want to check the temperature for your town on the websites of different weather stations, but one of the websites gets relaunched. A classical bot would need to be adjusted to crawl the data correctly. An agentic AI would try to adapt to the new situation.

Intuitive:

Instead of using a complex software interface, the user can directly interact using just natural language, which enhances productivity.
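The "LLM as orchestrator" idea from the list above boils down to a loop: plan the next step, call a tool, feed the observation back, and repeat. Below is a minimal Python sketch of that loop, under clearly simplified assumptions. The LLM is replaced by a hypothetical rule-based `plan_next_step` function, and the tools `search` and `calculator` are stubs working on made-up data. A real agent would let a model choose the tool and its arguments, and would also cap the number of steps, as done here with `max_steps`.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical stub tools; a real agent would call a search API, a database, etc.
def search(query: str) -> str:
    fake_index = {"population of exampletown": "12000"}  # made-up data
    return fake_index.get(query.lower(), "no result")

def calculator(expression: str) -> str:
    # Only handles "a * b" so the sketch does not need eval()
    left, right = (part.strip() for part in expression.split("*"))
    return str(int(left) * int(right))

TOOLS: Dict[str, Callable[[str], str]] = {"search": search, "calculator": calculator}

@dataclass
class Step:
    tool: Optional[str]  # None means "finish and return the argument"
    argument: str

@dataclass
class AgentState:
    task: str
    observations: List[str] = field(default_factory=list)

def plan_next_step(state: AgentState) -> Step:
    """Hypothetical stand-in for the LLM: picks the next action from the state."""
    if not state.observations:
        return Step("search", "population of Exampletown")
    if len(state.observations) == 1:
        return Step("calculator", f"{state.observations[0]} * 2")
    return Step(None, state.observations[-1])

def run_agent(task: str, max_steps: int = 5) -> str:
    state = AgentState(task)
    for _ in range(max_steps):
        step = plan_next_step(state)
        if step.tool is None:
            return step.argument  # the planner considers the task solved
        observation = TOOLS[step.tool](step.argument)
        state.observations.append(observation)  # feed the result back into the loop
    return "step budget exhausted"

print(run_agent("What is twice the population of Exampletown?"))  # 24000
```

Swapping the rule-based planner for a model call leaves the loop itself unchanged. That is why the step budget matters: a model that never decides to finish would otherwise run forever.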

It's also possible to combine multiple AI agents by chaining them into a pipeline, aggregating the results from a fleet of agents, or controlling their behaviour "in flight." A typical workflow of a single agent might look like the one depicted below. However, the number of steps in an agent's workflow is not limited, and those steps can also involve other agents.

[Figure: a typical workflow of a single AI agent]
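The two ways of combining agents mentioned above, chaining them and aggregating the answers of a fleet, can be sketched with plain functions standing in for agents. In this sketch, each "agent" is a hypothetical callable from text to text, and the toy agents are for illustration only. In practice, each agent would wrap its own model, prompt, and tools. Majority voting is just one simple aggregation strategy among many.

```python
from collections import Counter
from typing import Callable, List

Agent = Callable[[str], str]  # hypothetical: an agent maps a text input to a text output

def pipeline(agents: List[Agent], task: str) -> str:
    """Chain agents: each agent's output becomes the next agent's input."""
    result = task
    for agent in agents:
        result = agent(result)
    return result

def fleet_majority(agents: List[Agent], task: str) -> str:
    """Run several agents on the same task and return the most common answer.
    Counter.most_common orders ties by first occurrence, so ties go to the
    answer that appeared first."""
    answers = [agent(task) for agent in agents]
    return Counter(answers).most_common(1)[0][0]

# Toy agents for illustration only
def extract(text: str) -> str:
    return text.split(":", 1)[1].strip()

def normalise(text: str) -> str:
    return text.lower()

def first_word(text: str) -> str:
    return text.split()[0]

print(pipeline([extract, normalise, first_word], "Request: Book Tickets for Friday"))  # book

voters: List[Agent] = [lambda t: "yes", lambda t: "no", lambda t: "yes"]
print(fleet_majority(voters, "Is the shop legitimate?"))  # yes
```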

Once again, we have a powerful tool at our disposal. Does it work perfectly? No, but many platforms use active learning to improve their bots. The bots still stumble over issues that a human would easily overcome, so human intervention is still required. This is a good opportunity to recall Moravec's paradox: tasks that are easy for humans (walking without bumping into things, recognising a familiar face in low light) are hard to teach an AI, while tasks we consider complex, like playing chess or reasoning, are comparatively easy for it.

So there is room for optimization, and the problems won't be solved overnight, but I don't doubt that we will have a couple of usable bots on different platforms sooner or later. The competition is fierce: in March 2025, Amazon released Nova Act, which, according to Amazon's own benchmarks, outperformed OpenAI's and Anthropic's agents.

The more important question: how will such operators shape the future? I am not a fortune teller, but let's think a few steps ahead and see how it goes.

UI Automation Becomes Low-Hanging Fruit and Will Shape the Web

For years now, ticket sales have been plagued by masses of ticket bots that try to snap up tickets within minutes, and sometimes the tickets are even resold automatically at a much higher price. Some people try to counter this by programming their own bots to get tickets. This is not a new development. But with functional agentic AI, everyone will have access to bots and might achieve reasonable results. This could lead to a red-vs-blue scenario in which websites try to protect their shops from bots, as is already happening. However, bots already get past CAPTCHAs, and reliably recognizing a human web user is hard. So in the long term, other solutions will be needed.

Maybe not for ticket sale websites, but it is not unlikely that providers will optimise their websites for bots. Bots don't need all the design assets; they need the navigation to the information. If you can't beat them, join them, right?

A standard for a dedicated bot-optimised view could be a win-win for website providers and AI agents. It would not work like a robots.txt file, but rather like a switch to a different site style, e.g., https://domain.tld/?plain=true. The provider could save traffic, and the AI agents might get a clearer view. It is also very likely that accessibility standards like ARIA will gain importance, since they add semantic information. Of course, an agent can learn to interact with an API it has never seen before, but since websites are optimized to lead users to the right information or functionality, navigating them promises a faster reward for a bot.

Writing a crawler has long been a manageable task, but keeping a website scraper reliable was hard, especially when the website's design changed.

Libraries like BeautifulSoup or Playwright usually support you in such tasks, but both require more or less stable markup. Change the ID or class of a label, and you may have to adapt your scripts and tests as well… at least for now.
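A small example shows why semantic markup can make lookups more robust. To stay dependency-free, the sketch below uses Python's standard-library `html.parser` instead of BeautifulSoup or Playwright. It looks up a price in two versions of the same hypothetical page: once by its `id`, and once by its `aria-label`. The markup and attribute values are made up. After the simulated relaunch renames the `id`, only the lookup by ARIA label still finds the price. This is an illustration, not a guarantee: a relaunch can change ARIA labels, too.

```python
from html.parser import HTMLParser
from typing import Optional

class AttributeFinder(HTMLParser):
    """Records the first non-empty text that follows the first start tag
    whose attribute `attr` equals `value`."""

    def __init__(self, attr: str, value: str) -> None:
        super().__init__()
        self.attr, self.value = attr, value
        self.inside = False
        self.text: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if self.text is None and dict(attrs).get(self.attr) == self.value:
            self.inside = True

    def handle_data(self, data):
        if self.inside and data.strip():
            self.text = data.strip()
            self.inside = False

def find_text(html: str, attr: str, value: str) -> Optional[str]:
    finder = AttributeFinder(attr, value)
    finder.feed(html)
    finder.close()
    return finder.text

# Hypothetical page before and after a relaunch: the id changed, the ARIA label did not
before = '<span id="price-old" aria-label="ticket price">49 EUR</span>'
after = '<div class="new-layout"><span id="p-7f3a" aria-label="ticket price">49 EUR</span></div>'

print(find_text(before, "id", "price-old"))            # 49 EUR
print(find_text(after, "id", "price-old"))             # None: the id-based scraper breaks
print(find_text(after, "aria-label", "ticket price"))  # 49 EUR
```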

AI-Driven Testing

Testing is necessary, and it doesn't come for free. Behaviour-driven development is used in software projects to describe the expected behaviour of software in natural language that everyone involved can understand. Usually, the technical setup is done with tools like Cucumber and its Gherkin syntax, and it takes time to get everything up and running, since each natural-language step needs to be connected to your code base. We already have natural language as the test specification, so the obvious choice is to let an AI agent execute it and observe how it navigates the application. Some developers are already using this approach to test features they develop locally. The ramp-up time for a test environment could drop dramatically, but only if the AI agents act deterministically!

An interesting side effect might be improved website usability. Many conventions on the web are unspoken standards, such as the design of search, navigation, responsive breakpoints, and device optimization. We can observe those standards across many websites, and an AI agent learns them implicitly. If such an agent struggles with your UI, human users likely will, too, so web apps whose developers use AI agents for testing could become more usable overall. On the other hand, if there is a paradigm shift (e.g., augmentic UI), it might take some time for AI agents to catch up.
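To illustrate the idea of using an agent to execute a natural-language test specification, the sketch below extracts the steps from a Gherkin-style scenario and hands them to an agent one by one. Because determinism is the open question, it also runs the agent several times and checks whether the recorded action traces are identical. `stable_agent` and `flaky_agent` are hypothetical stand-ins, and the scenario is made up. A real setup would drive a browser through some agent API. This is also not how Cucumber itself works, since Cucumber maps each step to a step definition in code.

```python
import random
from typing import Callable, List

SCENARIO = """
Feature: Ticket search
  Scenario: Find a concert
    Given I am on the start page
    When I search for "Band X"
    And I open the first result
    Then I see the ticket price
"""

STEP_KEYWORDS = ("Given ", "When ", "Then ", "And ", "But ")

def parse_steps(scenario: str) -> List[str]:
    """Extract the step lines of a Gherkin-style scenario, keeping the keyword."""
    lines = (line.strip() for line in scenario.splitlines())
    return [line for line in lines if line.startswith(STEP_KEYWORDS)]

# Hypothetical: an agent maps a step to the UI action it performed
Agent = Callable[[str], str]

def run_scenario(agent: Agent, steps: List[str]) -> List[str]:
    return [agent(step) for step in steps]

def is_deterministic(agent: Agent, steps: List[str], runs: int = 5) -> bool:
    """True if every run produces the same action trace."""
    traces = [run_scenario(agent, steps) for _ in range(runs)]
    return all(trace == traces[0] for trace in traces)

def stable_agent(step: str) -> str:
    return "action for: " + step  # always reacts the same way

def flaky_agent(step: str) -> str:
    # Randomly picks one of two elements, as a non-deterministic model might
    return random.choice(["click #search", "click .nav-search"]) + " / " + step

steps = parse_steps(SCENARIO)
print(len(steps))                             # 4
print(is_deterministic(stable_agent, steps))  # True
print(is_deterministic(flaky_agent, steps))   # almost always False
```

Comparing whole traces is deliberately strict. A softer check could accept different clicks as long as the `Then` step's outcome is the same, at the price of hiding flaky paths.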

Platform Security

Back to the ticket sale. You have an AI agent trained to fetch you a ticket for your favorite band. What does it need to buy the ticket? Payment credentials, such as a PayPal login or your credit card number. In other words, there will be AI agents on the web that are willing to share such data as soon as they are convinced they are buying a ticket. And that's where it gets tricky.

Some scam shops are already so well made that it's very hard to distinguish them from the original. I almost fell victim to a fake Scarpa shop last year. The domain looked solid (scarpa-outlet-online.shop), the certificate was valid, and the imprint looked legitimate, but the prices were so low that I became suspicious. Luckily, I did not enter any data, but a few friends of mine did. With more AI agents on the rise, they will obviously be targeted by scammers as well.

But it's not only about protecting sensitive data the AI might give away; it's also about avoiding or interrupting unintended actions. As far as I know, with AI agents on the web, this is basically the first time we have to talk about both security and safety in an entirely digital realm.
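One possible way to interrupt unintended actions is sketched below, under simple assumptions. Before an agent performs a sensitive action such as entering payment data, a guard checks the shop's host against an allowlist maintained by the user. Any unknown shop is escalated to a human. The action names and the allowlist entries are hypothetical. As the Scarpa example shows, a valid certificate and a plausible domain prove little, so a real system would need more signals than this.

```python
from urllib.parse import urlparse

# Hypothetical, user-maintained allowlist of shops where the agent may pay
ALLOWED_SHOPS = {"tickets.example.com", "shop.example.org"}

# Hypothetical action names that touch money or personal data
SENSITIVE_ACTIONS = {"enter_payment", "submit_order"}

def authorise(action: str, url: str) -> str:
    """Return 'allow' or 'ask_human' for an action the agent wants to take on a URL."""
    if action not in SENSITIVE_ACTIONS:
        return "allow"  # e.g. browsing is not gated in this sketch
    parsed = urlparse(url)
    if parsed.scheme != "https":
        return "ask_human"
    # Exact host match, so "tickets.example.com.evil.shop" does not pass
    if parsed.hostname in ALLOWED_SHOPS:
        return "allow"
    return "ask_human"  # unknown shop: interrupt and let the user decide

print(authorise("enter_payment", "https://tickets.example.com/checkout"))    # allow
print(authorise("enter_payment", "https://scarpa-outlet-online.shop/cart"))  # ask_human
print(authorise("browse", "https://scarpa-outlet-online.shop/"))             # allow
```

The exact host comparison is the important design choice here. A substring or suffix check on the domain is precisely what lookalike shops exploit.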

Vendor Dominance

Although open-source equivalents already exist, there could be a price battle over operating costs, which the most cost-efficient platform owner might win. This might hurt further FOSS development in that field and increase the likelihood of vendor lock-in.

Almost a year ago, we already had a reinforcement-driven bot for software engineering, though its real-world use was limited to green-field apps and simple web apps. What is especially hard for language models is an architect's intuition (deep knowledge). They can gather and abstract rules, but applying them with appropriate prioritization in an unseen codebase or scenario has worked better with reinforcement learning in the past. We also had active learning for LLMs. Now we are combining those approaches in agents, so technically speaking, an agent could be trained to act as a good software architect and modernise legacy software. This could significantly impact the software industry, because software quality metrics can quantify the effect of individual refactoring steps and thus provide feedback for such training.

Another topic is orchestrating a large fleet of bots. CrewAI is a well-known candidate here (and also open source ❤️), but other players like W&B are also trying to offer solutions.
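To make the idea of using quality metrics to quantify a refactoring more concrete, the sketch below approximates cyclomatic complexity with Python's built-in `ast` module. It counts decision points and compares a hypothetical function before and after a refactoring. The counting rule is a simplification chosen for brevity, and dedicated tools such as radon count more constructs. A single score like this captures only one local aspect of code quality, not architecture.

```python
import ast

# Node types counted as decision points in this simplified metric
DECISION_NODES = (ast.If, ast.For, ast.While, ast.IfExp,
                  ast.ExceptHandler, ast.BoolOp, ast.comprehension)

def complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points."""
    tree = ast.parse(source)
    return 1 + sum(isinstance(node, DECISION_NODES) for node in ast.walk(tree))

# Hypothetical function before the refactoring: nested branches
BEFORE = '''
def shipping_cost(country, weight):
    if country == "DE":
        if weight > 10:
            return 10
        else:
            return 5
    elif country == "FR":
        if weight > 10:
            return 12
        else:
            return 6
    else:
        return 20
'''

# Same behaviour after the refactoring: a lookup table and one conditional
AFTER = '''
RATES = {"DE": (5, 10), "FR": (6, 12)}

def shipping_cost(country, weight):
    light, heavy = RATES.get(country, (20, 20))
    return heavy if weight > 10 else light
'''

print(complexity(BEFORE), complexity(AFTER))  # 5 2
```

For an agent, such a score would only be a useful reward if behaviour is preserved as well. In practice, the metric would be combined with a test suite that both versions must pass.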

So not only are platforms like OpenAI, AWS, and Anthropic in competition; the toolchains and frameworks might also change over time. It's unclear whether the market will converge on a de facto standard platform. Since chatbots communicate in natural language, different ecosystems could also make use of each other.

Why will it last?

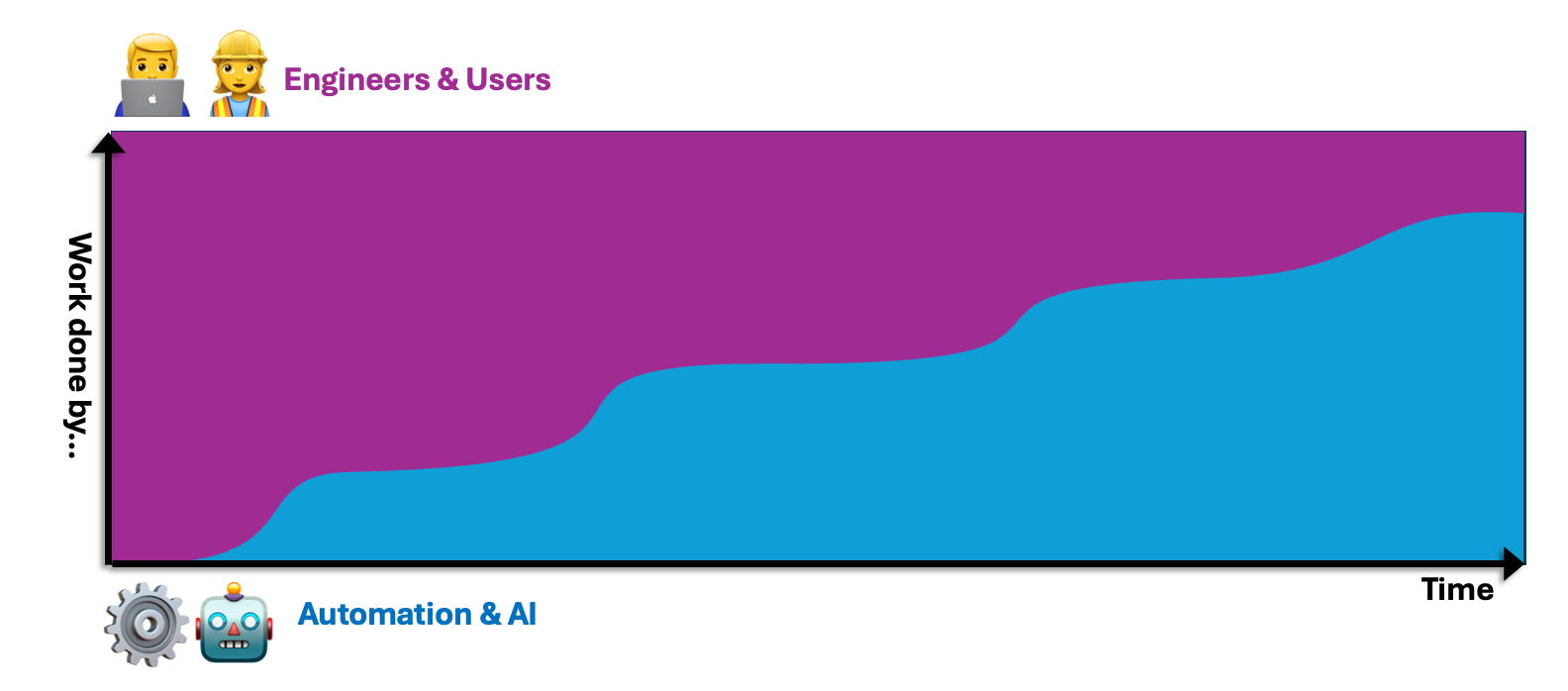

Most people adopt new behaviours for the sake of convenience, sometimes even when it's not in their favor. Looking at the past, we achieved this convenience through automation and abstraction. The recent "episodes" in software engineering illustrate this pretty well: Extreme Programming with OOP (abstraction), CI/CD (automation), containerization (abstraction and automation), the rise of hyperscalers (abstraction and automation), natural language-based AI interaction (abstraction), and agentic systems (abstraction and automation). My gut feeling is that this happens in waves, as depicted above. Undoubtedly, agentic systems will become a daily driver, and sometimes we won't even notice we are using them.

The key question is: will vendors and researchers manage to create reliable, safe, and deterministic solutions? As I said at the beginning, I am not a fortune teller. So let's see where this journey will lead us 😎

Let’s build the future together!

If you’re curious how Agentic AI could impact your business, your product, or even your own work processes — feel free to reach out. I’m always happy to exchange ideas, explore possible use cases, or simply chat about where this fast-moving field might be headed.