"We can’t solve problems by using the same kind of thinking we used when we created them.” — Albert Einstein

✨ Executive Summary

In today's cloud-native landscape, Kubernetes clusters are living organisms—they scale elastically, handle countless daily configuration changes, and power everything from fintech to AI inference. Traditional human-driven security hardening simply can't keep up. This article introduces a fully agentic security pipeline that combines K8sGPT for comprehensive cluster scanning, LangChain-powered reasoning, Kyverno for policy enforcement, and GitOps for audit-grade delivery—all orchestrated through Slack.

By the end, you'll understand:

- How "agentic" translates to practical security engineering.

- How to implement a Slack-first workflow that observes → thinks → acts → validates while maintaining human oversight.

- Best practices for maintaining quality standards, eliminating redundant rules, and controlling LLM hallucinations.

- Key real-world trade-offs in latency, cost, and false positives—enabling you to discuss both strengths and weaknesses with confidence.

- Why LangChain's orchestration capabilities earn the term "agentic".

TL;DR – While autonomy comes at a cost, properly configured agentic hardening can reduce remediation time from weeks to minutes while maintaining full Git-level traceability.

❓ Why Another Kubernetes Hardening Approach?

Most Kubernetes hardening guides culminate in security checklists: enable audit logging, set Pod Security Standards, block :latest, and so on. While these represent good hygiene practices, they depend on consistent human execution—and humans make mistakes.

Modern Kubernetes environments are highly dynamic. Every deployment, image update, and RBAC change introduces potential risk. Manual reviews can't keep up.

What we need is:

- Continuous security observability

- Automated reasoning and remediation

- Guardrails via GitOps and policy-as-code

This blog introduces a truly agentic hardening pipeline—a system where an AI agent automatically:

- Scans Kubernetes resources (via K8sGPT) and container images (via Trivy)

- Groups and interprets issues

- Generates Kyverno policies or patches

- Validates them

- Opens GitHub pull requests

While human reviewers maintain final oversight, the pipeline manages everything else.
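
The "groups and interprets issues" step can be sketched roughly as follows. This is a minimal illustration, assuming the scan output resembles the JSON that `k8sgpt analyze -o json` emits (a top-level `results` list whose items carry `kind`, `name`, and an `error` list with `Text` fields). Those field names should be checked against your K8sGPT version, and `group_findings` and `SAMPLE` are hypothetical names introduced only for this example.

```python
import json
from collections import defaultdict


def group_findings(raw_json: str) -> dict[str, list[dict]]:
    """Group scan findings by resource kind so related issues are handled together."""
    report = json.loads(raw_json)
    grouped: dict[str, list[dict]] = defaultdict(list)
    # `results` may be null or absent when the scan finds nothing.
    for result in report.get("results") or []:
        messages = [e.get("Text", "") for e in result.get("error") or []]
        grouped[result.get("kind", "Unknown")].append(
            {"name": result.get("name", ""), "messages": messages}
        )
    return dict(grouped)


# Hand-written sample in the assumed shape (not real scanner output).
SAMPLE = json.dumps({
    "results": [
        {"kind": "Ingress", "name": "default/web",
         "error": [{"Text": "Ingress has no ingress class"}]},
        {"kind": "Pod", "name": "default/api-1",
         "error": [{"Text": "Back-off pulling image"}]},
        {"kind": "Pod", "name": "default/api-2",
         "error": [{"Text": "Back-off pulling image"}]},
    ]
})

if __name__ == "__main__":
    for kind, items in group_findings(SAMPLE).items():
        print(kind, len(items))
```

Grouping by kind before prompting keeps each LLM call focused on one class of problem, which tends to make the generated policy easier to review.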

What Makes This Agentic?

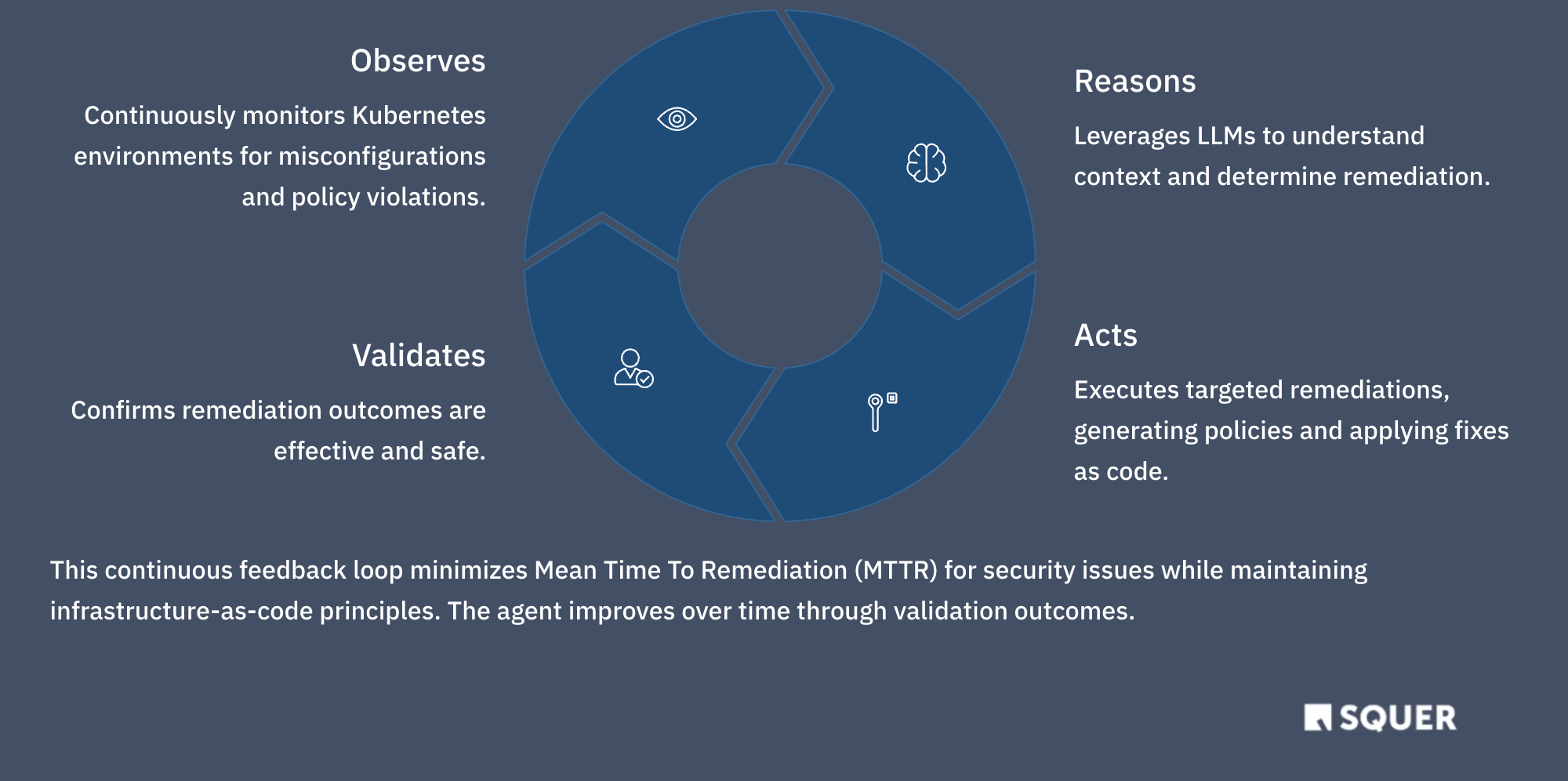

We define agentic behavior as:

"A system that observes state, reasons over it, makes decisions, takes action, and learns from results."

How does our pipeline embody that?

✅ 1. LLM Controls the Flow

- The LangChain agent selects appropriate tools (`generate_policy`, `generate_patch`, `validate_policy`).

- It determines the required YAML or JSON output format.

- It self-corrects when validations fail.

✅ 2. Reflexivity and Validation

- When `kyverno apply` fails, the agent re-prompts itself with updated context.

- This creates a complete feedback loop: plan → validate → adjust → replan.

✅ 3. Memory and Deduplication

- A vector DB stores previously merged policies.

- Cosine similarity prevents duplicate rules by detecting semantic matches.

✅ 4. Dynamic Routing

- `RouterChain` directs workflow based on issue type (e.g., CVE vs config).

📌 This aligns with Hugging Face and Anthropic's definition:

"The LLM drives the control flow, not just fills in templates."

Link: https://huggingface.co/docs/smolagents/v1.21.0/conceptual_guides/intro_agents

Agentic vs Automated

🏗️ Solution Architecture (High Level)

🧬 Core Capabilities of Agentic Hardening Systems

- Perceptual Awareness – Can observe real-time cluster state via logs, CRDs, or API snapshots.

- Semantic Understanding – Can interpret context (e.g., difference between dev/test/prod misconfigurations).

- Goal-Directed Planning – Doesn't just fix problems—it prioritizes based on risk, blast radius, or SLAs.

- Tool Orchestration – Selects appropriate remediation mechanism: patch, Kyverno policy, or escalation.

- Action & Feedback Loop – Executes action, validates outcome (via `kyverno apply` or live test), and re-learns.

- Traceable Auditing – Maintains Git-based trace and Slack summaries for human-in-the-loop accountability.

🗄️ Governance & Escalation Flow

- Jira ticket linked via PR template

- Rollback: Argo CD “Auto Sync + Prune=false”, one-click revert.

🔒 Threat Scenarios & Mitigations

📊 Metrics & SLOs

Track with Grafana dashboards or Slack summaries.

🧪 Baseline Policy Quality Assurance

Security isn't magic. This pipeline incorporates essential safeguards:

- ✔ Schema-locked prompt outputs (Kyverno YAML)

- 🧪 `kyverno apply` validation against fixtures before PR

- 🧠 Deduplication logic via vector similarity

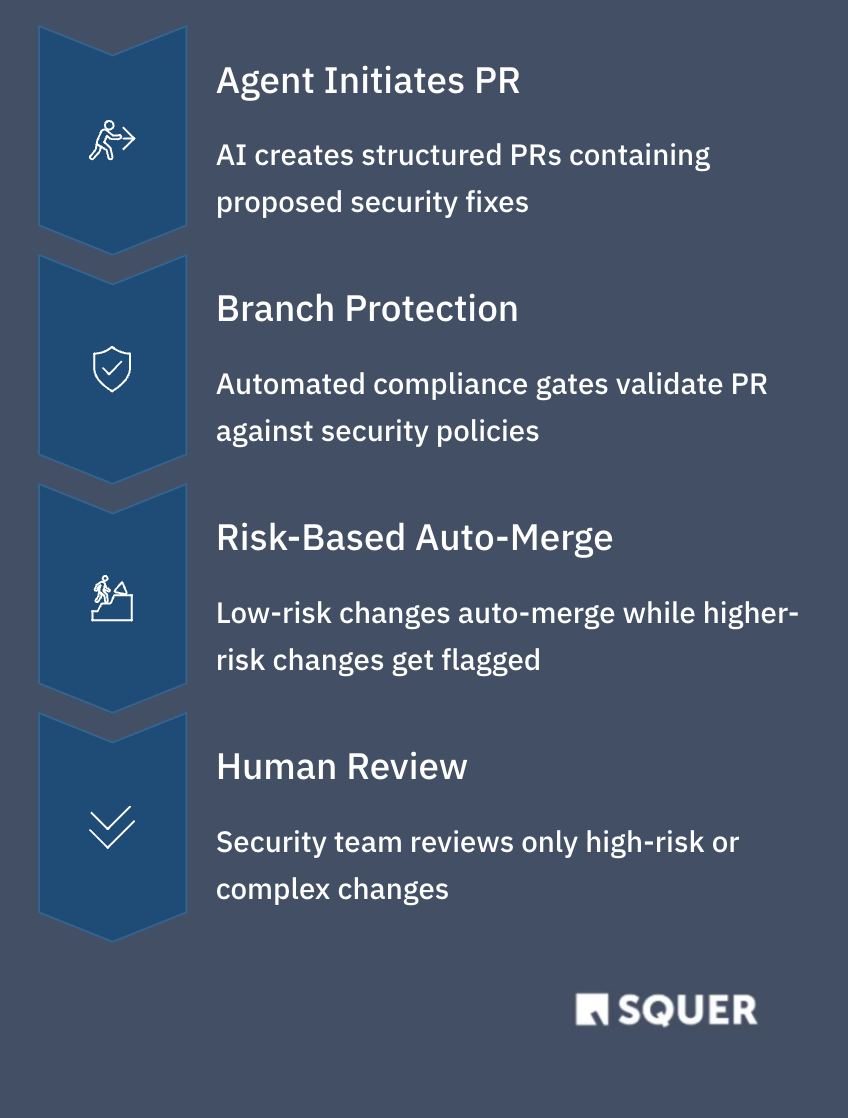

- 👤 Human-in-the-loop reviews for all PRs

- 🚦 Audit → Warn → Enforce progressive rollout

This addresses a crucial question: can different syntactic rules produce the same runtime effect? Yes—and our pipeline actively prevents such duplications.

✅ Changes only merge after passing tests and receiving reviewer approval.
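
The fixture check in the list above can be sketched as a thin wrapper around the Kyverno CLI. The command form `kyverno apply <policy> --resource <fixture>` is the CLI's documented usage; treating a non-zero exit code as "did not pass" is an assumption to confirm for your CLI version. `validate_with_kyverno`, `run_cli`, and the injectable `runner` parameter are hypothetical names, added so the logic can be exercised without a cluster or the CLI installed.

```python
import subprocess
from typing import Callable, Sequence

# A runner takes an argv list and returns (exit_code, combined_output).
Runner = Callable[[Sequence[str]], tuple[int, str]]


def run_cli(argv: Sequence[str]) -> tuple[int, str]:
    """Default runner: execute the command and capture its output."""
    proc = subprocess.run(list(argv), capture_output=True, text=True)
    return proc.returncode, proc.stdout + proc.stderr


def validate_with_kyverno(
    policy_path: str, fixture_path: str, runner: Runner = run_cli
) -> tuple[bool, str]:
    """Apply a policy to a fixture with the Kyverno CLI; return (passed, output)."""
    argv = ["kyverno", "apply", policy_path, "--resource", fixture_path]
    code, output = runner(argv)
    # Assumption: a non-zero exit code means the policy failed to load
    # or the fixture violated it.
    return code == 0, output
```

Returning the CLI output alongside the boolean matters for the feedback loop: the text of the failure is what the agent would receive when it re-prompts itself.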

🧰 Inside the LangChain AI Agent

🛠️ Toolset

🧭 Router Chain (simplified)

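    # Pick a tool based on where the finding came from and what it targets.
    if issue.get("source") == "trivy":
        action = generate_patch          # image CVEs are fixed by patching
    elif issue.get("kind") in {"Pod", "Deployment"}:
        action = generate_policy         # workload misconfigurations get a Kyverno policy
    else:
        action = escalate_to_human       # anything else goes to a reviewer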

🧠 Memory (Dedup)

Each generated policy or patch is embedded and stored; if a new draft scores a cosine similarity of 0.9 or higher against a stored entry, creation is aborted.
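
A minimal sketch of that check, using plain Python lists in place of a vector DB. How the embeddings are produced is left out; `is_duplicate` and the toy vectors in the usage note are hypothetical, and only the 0.9 cutoff comes from the text.

```python
import math

SIMILARITY_THRESHOLD = 0.9  # cutoff named in the text


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_duplicate(
    candidate: list[float],
    stored: list[list[float]],
    threshold: float = SIMILARITY_THRESHOLD,
) -> bool:
    """True if the candidate embedding is close enough to any stored embedding."""
    return any(cosine_similarity(candidate, vec) >= threshold for vec in stored)
```

For example, with `stored = [[1.0, 0.0, 0.0]]`, a near-parallel candidate such as `[0.99, 0.05, 0.0]` is flagged as a duplicate, while an orthogonal one such as `[0.0, 1.0, 0.0]` is not. A real vector store would replace the linear scan with an index lookup.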

🔁 Self-Critique Loop

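    MAX_ATTEMPTS = 3  # assumed retry budget; tune for latency and cost

    for _ in range(MAX_ATTEMPTS):
        draft = action(issue)                # LLM output
        if validate_policy(draft):           # passes Kyverno
            break
        issue["error"] = "validation_fail"   # next attempt retries with context
    else:
        escalate_to_human(issue)             # no valid draft within the budget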
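
The self-critique loop can also be written as a self-contained function that is easy to test with stubs. This is a sketch under assumptions: the retry budget, the `(ok, feedback)` validator contract, and the name `self_critique` are illustrative and not taken from the reference implementation.

```python
from typing import Callable, Optional


def self_critique(
    issue: dict,
    generate: Callable[[dict], str],
    validate: Callable[[str], tuple[bool, str]],
    max_attempts: int = 3,
) -> Optional[str]:
    """Generate, validate, and retry with the validator's feedback.

    Returns the first draft that validates, or None when the budget is
    exhausted (the caller would then escalate to a human).
    """
    context = dict(issue)  # copy so the caller's issue is not mutated
    for _ in range(max_attempts):
        draft = generate(context)
        ok, feedback = validate(draft)
        if ok:
            return draft
        context["error"] = feedback  # feed the failure into the next prompt
    return None


if __name__ == "__main__":
    # Stub "LLM": produces a valid draft only after it has seen an error.
    def fake_generate(ctx: dict) -> str:
        return "good-policy" if "error" in ctx else "bad-policy"

    def fake_validate(draft: str) -> tuple[bool, str]:
        return draft == "good-policy", "missing match block"

    print(self_critique({"kind": "Pod"}, fake_generate, fake_validate))
```

Bounding the loop keeps a persistently failing prompt from burning tokens indefinitely, and passing the validator's message back (rather than a fixed string) gives the model something concrete to correct.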

Code Snapshot (conceptual scaffold)

⚠️ Disclaimer: This is a conceptual scaffold for education, not production-ready code. For a production-ready implementation, complete with full fixture libraries, multi-cluster support, and exhaustive logging, reach out to Ankit Asthana and Tom Graupner at SQUER Engineering.

    #!/usr/bin/env python3
    """Agentic K8s Hardening – scan ➜ reason ➜ fix ➜ PR"""

    from langchain.agents import Tool, initialize_agent
    from langchain_openai import ChatOpenAI

    # Define tool wrappers (stubs; real versions would call the scanners,
    # the LLM, the Kyverno CLI, and the Git provider)
    def scan_k8sgpt(): ...
    def scan_trivy(): ...
    def generate_policy(issue): ...
    def generate_patch(issue): ...
    def validate_policy(result): ...
    def dedup_policy(result): ...
    def open_pr(path, body): ...

    # Each Tool is (name, function, description); the description is what
    # the LLM reads when deciding which tool to call.
    TOOLS = [
        Tool("scan_k8sgpt", scan_k8sgpt, "K8sGPT scan"),
        Tool("scan_trivy", scan_trivy, "Trivy scan"),
        Tool("generate_policy", generate_policy, "Kyverno policy"),
        Tool("generate_patch", generate_patch, "JSON patch"),
        Tool("validate_policy", validate_policy, "Kyverno validation"),
        Tool("dedup_policy", dedup_policy, "Check duplicates"),
        Tool("open_pr", open_pr, "Commit & push PR"),
    ]

    agent = initialize_agent(TOOLS, ChatOpenAI(model="gpt-4o-mini"))

    if __name__ == "__main__":
        log = agent.run("Scan cluster → dedup → fix → validate → PR")
        print(log)

🖼️ Sample Implementation: AI-Driven Kubernetes Hardening in Action

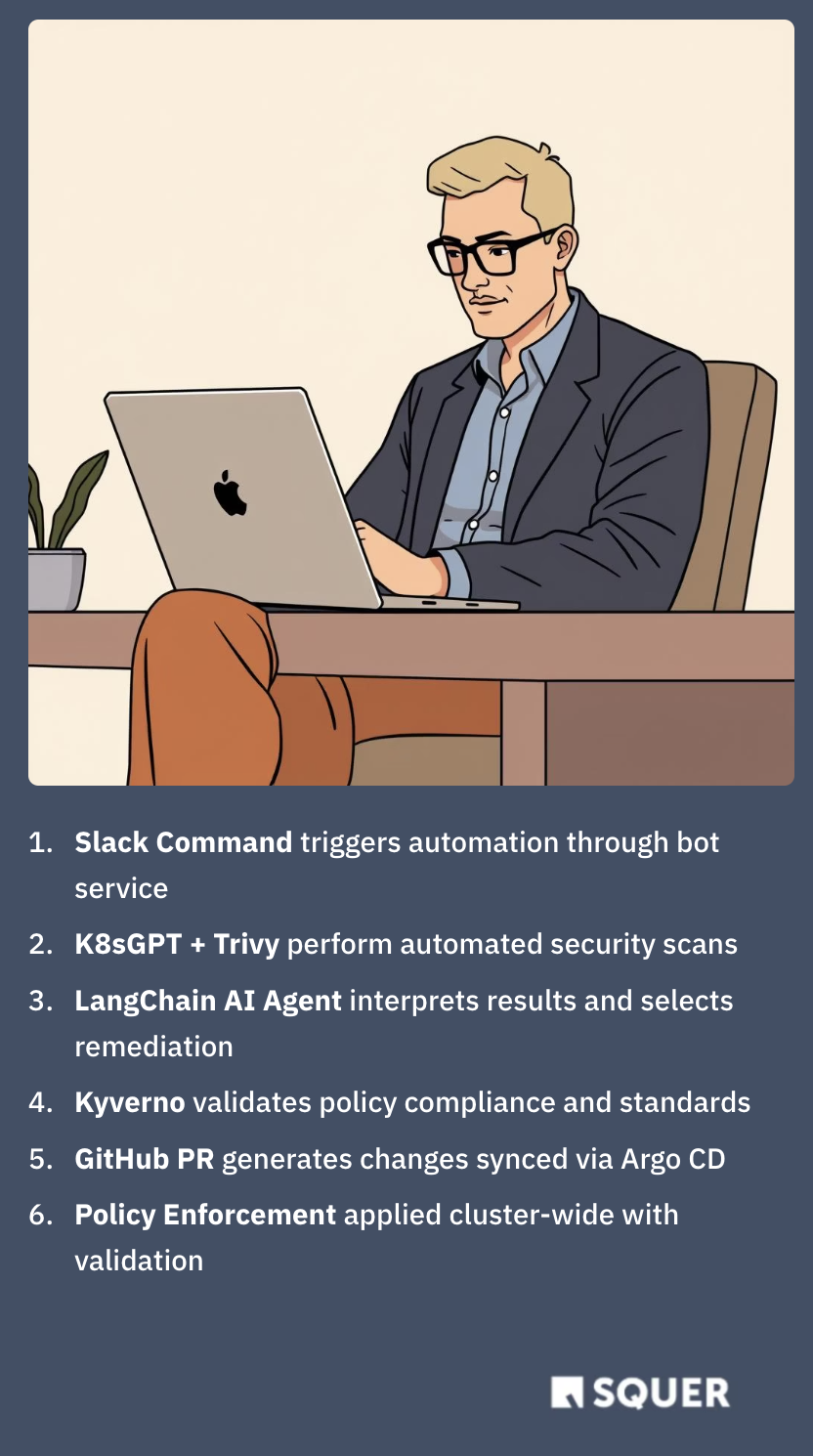

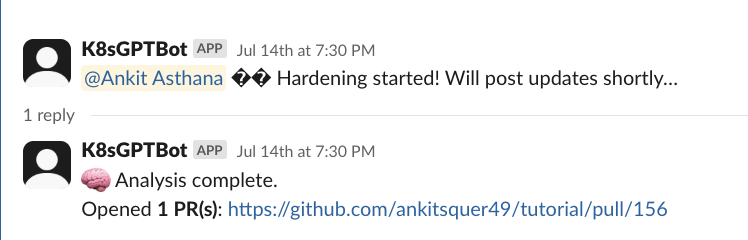

👉 💬 Hardening triggered directly from Slack

A user initiates the workflow with a simple Slack command against the Kubernetes cluster. The command hits an endpoint of the AI agent (running as a Flask app inside the cluster), which triggers the hardening workflow. For security, the setup enforces strict RBAC permissions on what the agent can execute and uses a scoped Slack bot token with limited rights to minimize risk.
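
One additional guardrail for an endpoint like this is to verify that each request really comes from Slack before any workflow starts. The sketch below follows Slack's documented `v0` signing scheme (an HMAC-SHA256 over `v0:<timestamp>:<raw body>`, compared with the `X-Slack-Signature` header). Whether the original setup does this is not stated, so treat it as an assumption; `verify_slack_signature` and the five-minute replay window are illustrative choices.

```python
import hashlib
import hmac
import time
from typing import Optional


def verify_slack_signature(
    signing_secret: str,
    timestamp: str,       # value of the X-Slack-Request-Timestamp header
    body: bytes,          # raw, unparsed request body
    signature: str,       # value of the X-Slack-Signature header
    now: Optional[float] = None,
    max_age_seconds: int = 300,
) -> bool:
    """Check Slack's v0 request signature and reject stale timestamps."""
    current = time.time() if now is None else now
    try:
        if abs(current - int(timestamp)) > max_age_seconds:
            return False  # too old or too far in the future: possible replay
    except ValueError:
        return False      # timestamp header was not an integer
    base = b"v0:" + timestamp.encode() + b":" + body
    digest = hmac.new(signing_secret.encode(), base, hashlib.sha256).hexdigest()
    expected = "v0=" + digest
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode(), signature.encode())
```

In a Flask handler this would be called with the raw request body and the two headers before the slash command is parsed, returning an error response when it yields `False`.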

👉 🤖 AI agent proposes a recommended fix

The agent analyzes the cluster, identifies misconfigurations, and responds with a suggested remediation.

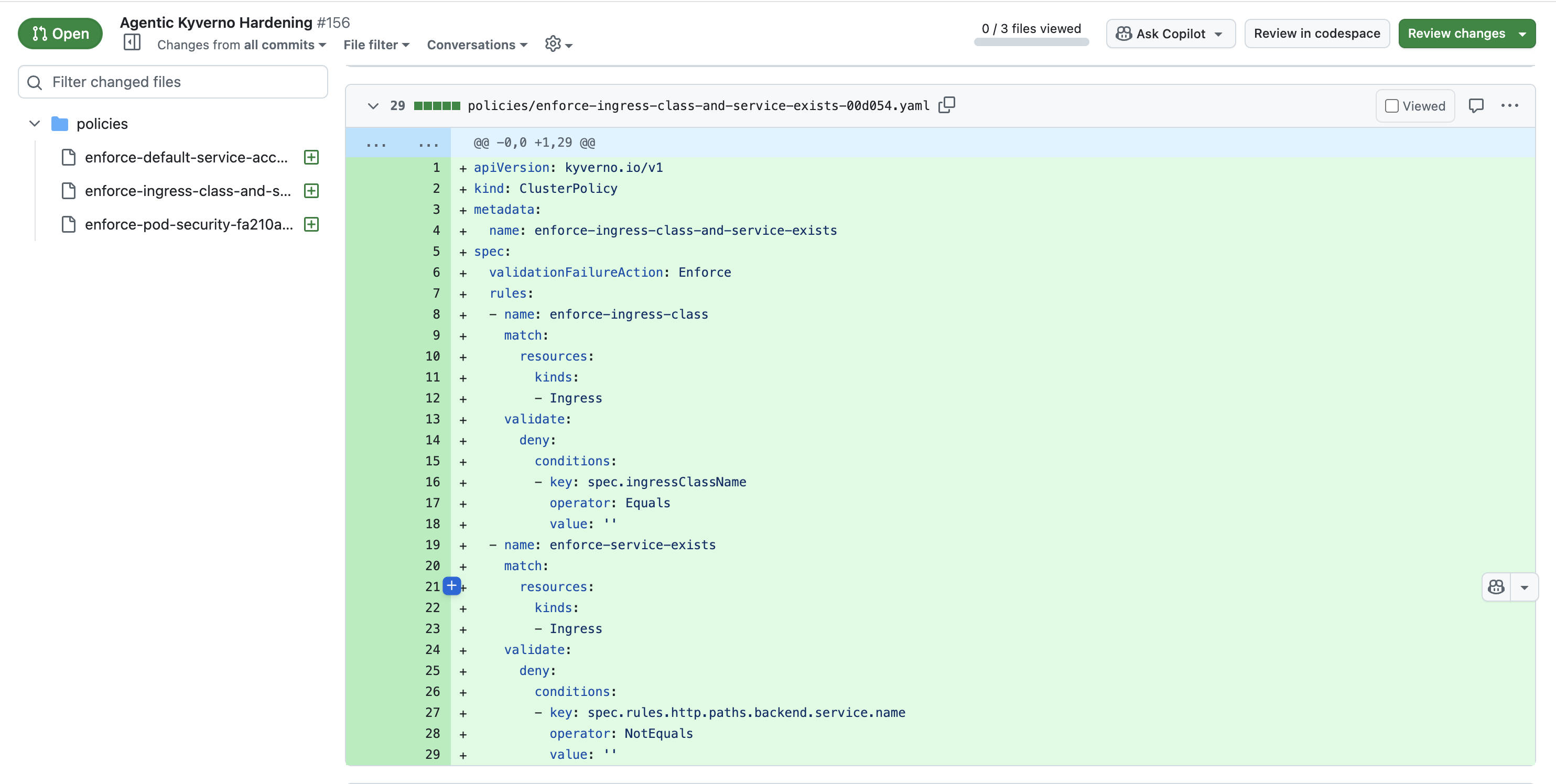

👉 📂 GitHub PR automatically created with a validated Kyverno policy

For example, if an Ingress resource is missing a backend or doesn't specify an ingress class, the AI agent will automatically generate a Kyverno ClusterPolicy to prevent such misconfigurations from being created.
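
To make the Ingress example concrete, here is a rough sketch of what such a policy could look like, built as a Python dict that can be dumped to JSON or YAML for the PR. It covers only the ingress-class half of the example, the policy and rule names are made up, and the real agent's output is not shown in this article. `has_required_shape` is a hypothetical pre-check that runs before the document is handed to the Kyverno CLI.

```python
import json


def build_ingress_class_policy(action: str = "Audit") -> dict:
    """Build a ClusterPolicy that requires Ingresses to set spec.ingressClassName."""
    return {
        "apiVersion": "kyverno.io/v1",
        "kind": "ClusterPolicy",
        "metadata": {"name": "require-ingress-class"},  # illustrative name
        "spec": {
            "validationFailureAction": action,  # "Audit" reports, "Enforce" blocks
            "rules": [
                {
                    "name": "check-ingress-class-name",
                    "match": {"any": [{"resources": {"kinds": ["Ingress"]}}]},
                    "validate": {
                        "message": "Ingress must set spec.ingressClassName.",
                        # "?*" is Kyverno's wildcard for "at least one character".
                        "pattern": {"spec": {"ingressClassName": "?*"}},
                    },
                }
            ],
        },
    }


def has_required_shape(policy: dict) -> bool:
    """Cheap structural check on an LLM-produced policy document."""
    rules = policy.get("spec", {}).get("rules", [])
    return (
        policy.get("apiVersion") == "kyverno.io/v1"
        and policy.get("kind") == "ClusterPolicy"
        and bool(rules)
        and all("name" in rule and "match" in rule for rule in rules)
    )


if __name__ == "__main__":
    print(json.dumps(build_ingress_class_policy(), indent=2))
```

A structural check like this is not a substitute for `kyverno apply`; it only rejects obviously malformed output early, before spending time on fixture validation.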

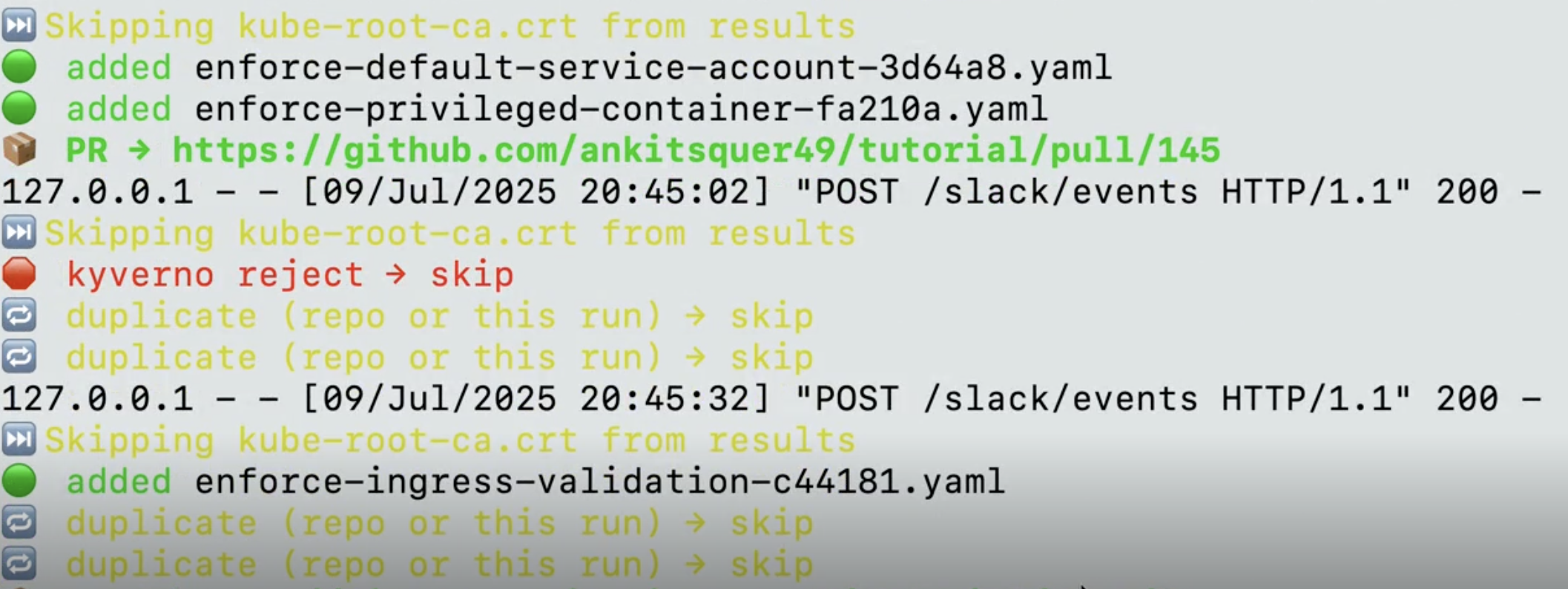

👉 🔁 Deduplication on subsequent runs

Once the PR is merged and deployed through ArgoCD, any future hardening runs on the same cluster will skip already-enforced policies. This deduplication logic ensures that duplicate findings do not generate unnecessary PRs.

🛠️ Implementation Guide

- Prerequisites – Kyverno CLI, K8sGPT, Trivy, GitHub token, OpenAI API key.

- `k8sgpt analyze -o json` → misconfig dataset.

- `trivy k8s --format json` → CVE dataset.

- LangChain agent routes, generates, validates, deduplicates.

- Passing fixes are committed & PR opened; branch protection + reviewers gate merge.

- Argo CD (or Flux) syncs merged policies to the cluster.

💡 Tip: start in audit mode; promote to warn/enforce after drift stabilizes.
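
The promotion step in that tip could be automated along these lines. This is a sketch, assuming policies carry Kyverno's `spec.validationFailureAction` field (`Audit` or `Enforce`) and that a recent violation count is available from your metrics. It models only the audit-to-enforce jump, since the article does not specify how the intermediate warn stage is configured; `promote_policy` and its threshold are hypothetical.

```python
import copy


def promote_policy(policy: dict, recent_violations: int, max_violations: int = 0) -> dict:
    """Return a copy of the policy, switched to Enforce once violations have settled.

    The input is never mutated, so the result can be diffed against the
    original and committed as a reviewable change.
    """
    promoted = copy.deepcopy(policy)
    spec = promoted.setdefault("spec", {})
    in_audit = spec.get("validationFailureAction", "Audit") == "Audit"
    if in_audit and recent_violations <= max_violations:
        spec["validationFailureAction"] = "Enforce"
    return promoted
```

Because the function returns a new document instead of patching the cluster, the promotion itself still flows through a pull request and keeps the same Git-level traceability as the rest of the pipeline.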

✋ Final Reminder

This snippet illustrates the flow, not a drop-in production service.

Add proper error handling, retries, auth rotation, multi-cluster tenancy and observability before real-world use.

🔐 Security & Compliance Checklist

🧪 Operational Best Practices

- Schedule nightly runs or trigger via the `/agentic-scan` command

- Begin with `audit` mode and gradually promote policies based on violation rates

- Maintain test fixtures for all rule categories

- Track performance through metrics (`kyverno_policy_violations_total`)

- Clean up outdated PRs when superseded by newer policies

⚖️ Trade-offs & Considerations

No system is perfect—this pipeline is powerful, but requires engineering maturity to operate safely.

🔮 Future Extensions

- RL-FI: Reinforcement Learning from Incidents

- CVE graphs: Map image lineage to policies

- Multi-cluster fleet support

- RLHF + feedback tuning for false positive suppression

🧾 Conclusion

Gone are the days of spreadsheets, scattered scripts, and tedious manual security reviews.

In their place stands an AI agent that vigilantly monitors your cluster, crafts Kyverno rules, validates them, submits pull requests, and awaits human approval.

This isn't mere automation—it's agentic security.

Begin gradually: examine the reference code, test in a single namespace, and allow the system to demonstrate its reliability.

The future of Kubernetes security has arrived, and it's autonomous, auditable, and human-approved.

💬 Quote

🔐 "Security is not a product, but a process."— Bruce Schneier, renowned cryptographer and cybersecurity expert

📚 References

- K8sGPT: https://github.com/k8sgpt-ai/k8sgpt

- Trivy: https://github.com/aquasecurity/trivy

- Kyverno: https://kyverno.io

- LangChain: https://python.langchain.com

- Argo CD: https://argo-cd.readthedocs.io

- Cosign: https://github.com/sigstore/cosign

🤝 Let's Connect — Bring Agentic Kubernetes Hardening to Your Team

At SQUER, we're passionate about empowering teams to adopt practical AI-driven security solutions for cloud-native environments. Whether you're:

- Exploring K8sGPT for intelligent cluster scanning

- Implementing LangChain- and Kyverno-powered remediation

- Seeking a live demo or internal workshop on agentic security pipelines

—we'd love to help.

📬 Ready to dive deeper?

Connect with us at squer.io to explore:

- 🏫 Custom workshops and enablement sessions

- 🔐 Secure AI adoption strategies for SRE and Platform teams

- ⚙️ End-to-end agentic hardening pipeline implementation

- 📊 Platform security and reliability assessments

Contact us:

- 📨 Ankit Asthana — ankit.asthana@squer.io

- 📨 Tom Graupner — tom.graupner@squer.io

Let's build autonomous, auditable, and human-approved Kubernetes security—together.